SCC Colloquia and Seminars

At the SCC Colloquium, experts from various institutions give scientific talks on significant topics from computer science, engineering, the natural sciences, and industry. In addition, students, staff members, and trainees of the SCC and of other KIT institutes hold debates and panel discussions on interdisciplinary topics from science and industry in which information technology plays a significant role.

The SCC Seminar is given by SCC staff members. It focuses on current research and development work at the SCC, on the planning of collaborations, projects, publications, and doctoral, master's, or other theses, and on technical innovations in the SCC's services and infrastructures.

For suggestions for future talks and for organizational questions, please contact Achim Grindler and Horst Westergom.

Contents

Colloquium - Accelerating the Deployment of Renewables with Computation

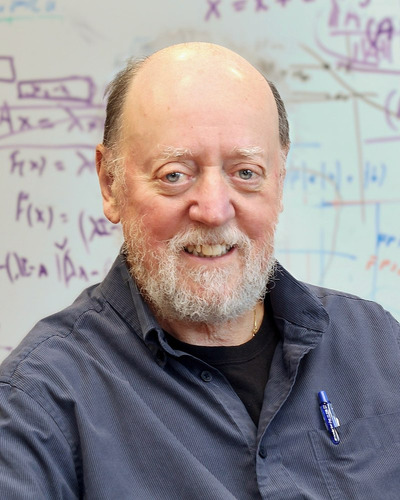

Talk by Prof. Dr. Andrew Grimshaw, Lancium Compute, Emeritus Professor of Computer Science, University of Virginia

Every MWh of electricity produced in the US today leads, on average, to 0.7 metric tons of CO2 emissions. That's the bad news; the good news is that renewable, carbon-neutral generation is now the least expensive means of producing electricity in the world. This has led to the development of tens of gigawatts of wind and solar capacity in the US. In West Texas alone, there is now so much excess power that wind and solar resources are often curtailed, leaving terawatt-hours of potential generation unused.

In short, generating sufficient power with renewables is no longer the problem. Instead, the problem is making use of this power. There are two significant challenges. First, the sun does not always shine and the wind does not always blow, so the current design of the electrical grid will have to change to accommodate fluctuating generation. Second, the best wind and solar resources are not located near the load, namely population centers and heavy industry.

One way to address this second problem is to identify energy-intensive, economically viable industries that can feasibly be moved to where power is plentiful and that can accommodate the large amounts of variable generation provided by renewable energy. Computation, including Bitcoin mining, fits these requirements: computation turns electricity into value and requires only power and networking to operate. Computation can be paused, restarted, and migrated between sites in response to differences in power availability. In essence, data centers can act like giant batteries and grid stabilizers, soaking up power and delivering value when renewable sources are producing a lot of power, and reducing consumption and stabilizing the grid when renewable output is limited.

In this talk I begin with some electrical grid basics: stability, primary frequency response, ancillary services, and the Texas CREZ (Competitive Renewable Energy Zones) transmission lines. I then show how Bitcoin mining, and computation more generally, can be used as a variable and controllable load to stabilize the grid, consuming energy when it is inexpensive, and dropping load and releasing energy back to the grid (i.e., to people) when energy prices are high. Further, buying TWhs of otherwise unused energy makes renewable generation more profitable by providing a stable base load, spurring further renewable energy projects. This in turn increases the availability of renewable energy even on cloudy and windless days.
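
The basic idea of computation as a controllable load can be illustrated with a toy dispatch rule. The load runs at full capacity when the electricity price is at or below a threshold and is curtailed otherwise. This is a minimal sketch under our own assumptions. The function names, the price series, and the threshold are hypothetical. The sketch does not represent Lancium's actual control strategy, which would also involve frequency response and ancillary-service commitments.

```python
from typing import List, Tuple


def dispatch_flexible_load(prices: List[float], capacity_mw: float,
                           price_cap: float) -> List[float]:
    """Power (MW) drawn in each interval by a curtailable load.

    Hypothetical rule: consume full capacity when the price is at or below
    price_cap; otherwise drop the load entirely and leave the energy to the grid.
    """
    return [capacity_mw if p <= price_cap else 0.0 for p in prices]


def energy_and_cost(prices: List[float], schedule: List[float],
                    hours_per_interval: float = 1.0) -> Tuple[float, float]:
    """Total energy (MWh) consumed and its cost for a given schedule."""
    energy = sum(mw * hours_per_interval for mw in schedule)
    cost = sum(mw * hours_per_interval * p for mw, p in zip(schedule, prices))
    return energy, cost


if __name__ == "__main__":
    # Hypothetical hourly prices in $/MWh; negative prices can occur when
    # renewable output exceeds what the grid can absorb.
    prices = [-5.0, 12.0, 35.0, 180.0, 900.0, 20.0]
    schedule = dispatch_flexible_load(prices, capacity_mw=100.0, price_cap=40.0)
    print(schedule)                           # [100.0, 100.0, 100.0, 0.0, 0.0, 100.0]
    print(energy_and_cost(prices, schedule))  # (400.0, 6200.0)
```

The load avoids the two expensive hours, which are typically the hours when the grid is most stressed. It absorbs energy whenever it is cheap.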

About the speaker: Prof. Dr. Grimshaw received his PhD in Computer Science from the University of Illinois in 1988 and joined the Department of Computer Science at the University of Virginia. In his 34-year career at Virginia, Grimshaw focused on the challenges of designing, building, and deploying solutions that meet user requirements on production supercomputing systems such as those operated by the DoD, NASA, DOE, and NSF. In addition to his academic career, Prof. Dr. Grimshaw has been a founder or very early employee of three startups: Software Products International, Avaki, and Lancium. Prof. Dr. Grimshaw retired this year from the University of Virginia to join Lancium and participate in its transformative mission to change how and where computing is done while decarbonizing the electrical grid.

----

You can also follow this colloquium via Zoom:

Zoom Link

Meeting-ID: 620 9094 7505

Passcode: 350879

Joint Informatik/SCC Colloquium - A Not So Simple Matter of Software

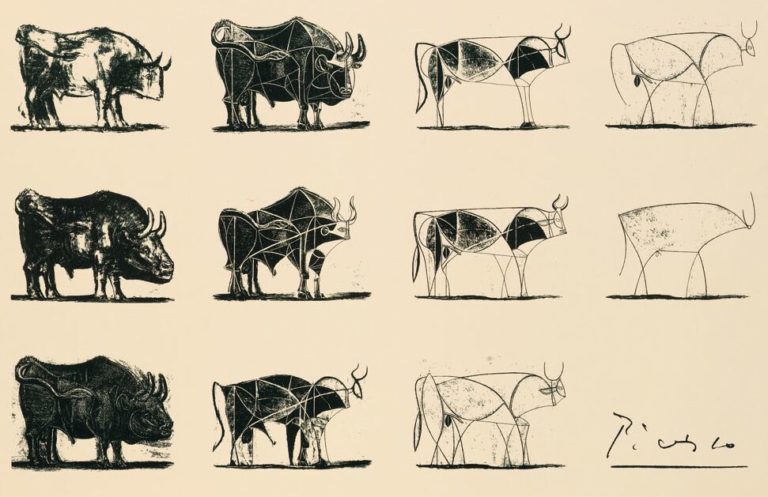

In this talk we will look at some of the changes that have occurred in high-performance computing and the impact they are having on how the algorithms and software libraries for our high-end computers are designed. For nearly forty years, Moore’s Law produced exponential growth in hardware performance, while most software failed to keep pace with these hardware advances. We will look at some of the algorithmic and software changes that have tried to keep up with the advances in hardware.

About the speaker: Jack Dongarra holds an appointment as University Distinguished Professor of Computer Science at the University of Tennessee and holds the title of Distinguished Research Staff at Oak Ridge National Laboratory (ORNL). He specializes in numerical algorithms in linear algebra, parallel computing, the use of advanced computer architectures, programming methodology, and tools for parallel computers. His research includes the development, testing, and documentation of high-quality mathematical software. Dongarra is the recipient of the 2021 A.M. Turing Award of the Association for Computing Machinery (ACM).

[Registration and online streaming access: www.scc.kit.edu/veranstaltungen/index.php/event/46692]

A recording of the talk as given at TU Darmstadt is available here: www.youtube.com/watch?v=QBCX3Oxp3vw

Colloquium - Accelerated Computing with NVIDIA GPUs

Accelerated computing is now everywhere and has become the only feasible way to achieve exascale computing within an acceptable power budget. Graphics Processing Units, or GPUs, are the most popular accelerators. However, achieving the best performance on GPUs is a true programming challenge. NVIDIA’s CUDA has made programming GPUs easier than ever before. To achieve the best performance, however, one must clearly understand the hardware architecture of a GPU and how GPU programming fundamentally differs from CPU programming. In this talk, we will introduce the concepts of accelerated programming, along with a deep dive into NVIDIA’s new Ampere architecture and a brief introduction to the CUDA programming model.
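
A central idea of the CUDA programming model is that a kernel is launched over a grid of thread blocks. Each thread computes its global index from `blockIdx.x`, `blockDim.x`, and `threadIdx.x`. The pure-Python sketch below emulates that index arithmetic for a SAXPY kernel (y = a·x + y) on the CPU. It illustrates only the indexing and the bounds check, not GPU execution or performance. The helper names `saxpy_kernel` and `launch` are hypothetical and are not NVIDIA APIs.

```python
import math
from typing import List


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 n: int, a: float, x: List[float], y: List[float]) -> None:
    """Hypothetical emulation of one CUDA thread of a SAXPY kernel."""
    # Same arithmetic as CUDA: i = blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    # Guard: the last block may contain threads beyond the end of the array.
    if i < n:
        y[i] = a * x[i] + y[i]


def launch(n: int, a: float, x: List[float], y: List[float],
           threads_per_block: int = 256) -> None:
    """Emulate a launch like saxpy<<<(n + 255) / 256, 256>>>(...) sequentially."""
    blocks = math.ceil(n / threads_per_block)  # enough blocks to cover n elements
    for b in range(blocks):                    # on a GPU, blocks run concurrently
        for t in range(threads_per_block):     # ... and so do threads in a block
            saxpy_kernel(b, threads_per_block, t, n, a, x, y)


if __name__ == "__main__":
    n = 1000
    x = [1.0] * n
    y = [2.0] * n
    launch(n, 3.0, x, y)  # 4 blocks x 256 threads; the last 24 threads do nothing
    print(y[0], y[-1])    # 5.0 5.0
```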

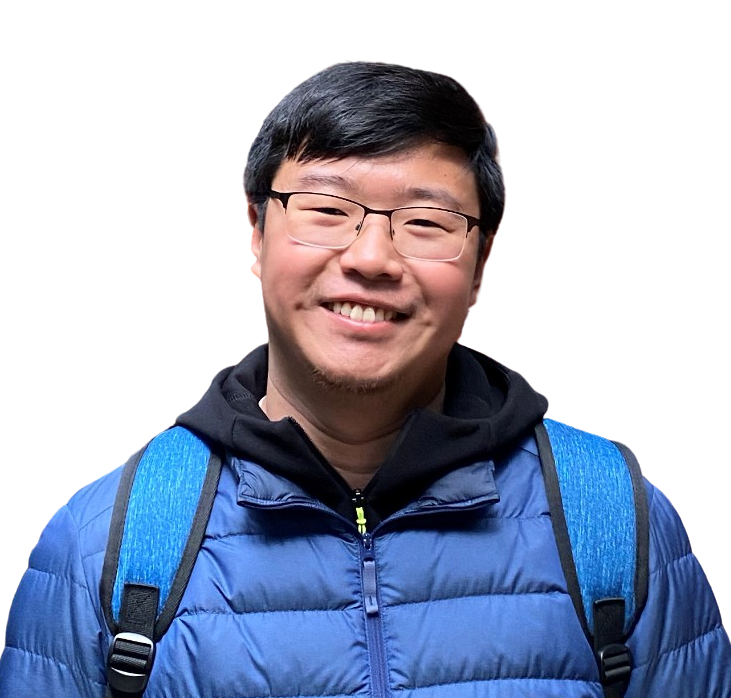

About the speaker: Dai Yang is a senior solution architect at NVIDIA, responsible for tailoring customer-optimized solutions with NVIDIA GPU and networking products, with a strong focus on industry, automotive, and HPC customers. Dai is actively involved in planning, deployment, and training for DGX solutions in the EMEA region, including the newly announced Cambridge-1 system. Prior to joining NVIDIA, Dai was a research associate at the Technical University of Munich, working on various aspects of high-performance computing, and contributed drafts of standard extensions for MPI to the MPI Forum. Dai was part of the team that won the Hans Meuer Award at ISC 2020. Dai holds a PhD in informatics from the Technical University of Munich.

Zoom Video Meeting Specifications

Topic: SCC Colloquium - Accelerated Computing with NVIDIA GPUs

Date & Time: 11 Nov 2020, 01:45 PM (Amsterdam, Berlin, Rome, Stockholm, Vienna)

Joining Zoom Meeting:

kit-lecture.zoom.us/j/66040077771

Meeting-ID: 660 4007 7771

Joint GridKa School and SCC-Colloquium ‑ Why the future of weather and climate prediction will depend on supercomputing, big data handling and artificial intelligence

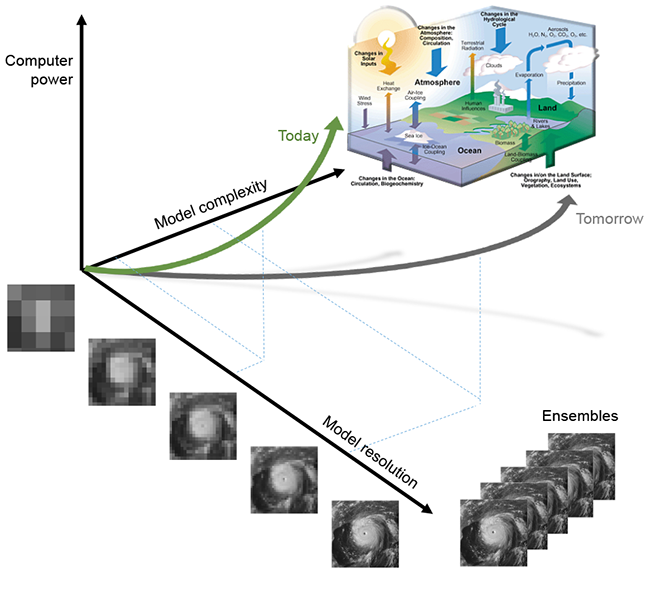

Weather and climate prediction are high-performance computing applications with outstanding societal and economic impact. This impact ranges from the daily decision-making of citizens to that of civil services for emergency response, and from predicting weather-driven factors in food, agriculture, and energy markets to risk and loss management by insurers.

Forecasts are based on millions of observations made every day around the globe and physically based numerical models that represent processes acting on scales from hundreds of metres to thousands of kilometres in the atmosphere, the ocean, the land surface and the cryosphere. Forecast production and product dissemination to users is always time critical and forecast output data volumes already reach petabytes per week.

Meeting future requirements for forecast reliability and timeliness requires high-performance computing and data management resources 100 to 1000 times larger than today's, towards what is generally called ‘exascale’. To meet these needs, the weather and climate prediction community is undergoing one of the biggest revolutions since its foundation in the early 20th century.

This revolution encompasses a fundamental redesign of mathematical algorithms and numerical methods, the adaptation to new programming models, the implementation of dynamic and resilient workflows, and the efficient post-processing and handling of big data. Given these enormous computing and data challenges, artificial intelligence methods offer significant potential for gaining efficiency and for making optimal use of the generated information for European society.

About the speaker: Dr Peter Bauer is the Deputy Director of the Research Department at ECMWF in the UK and heads the ECMWF Scalability Programme. He obtained his PhD in meteorology from the University of Hamburg, Germany. During his career, he was awarded post-doctoral and research fellowships working at NOAA and NASA in the US and at IPSL in France. He is an international fellow of the German Helmholtz Society. He led a research team on satellite meteorology at DLR in Germany before joining ECMWF in 2000. He has been a member of advisory committees for national weather services, the World Meteorological Organization, and European space agencies. He is coordinating the FET-HPC projects ESCAPE and ESCAPE-2, and the recent ExtremeEarth proposal for European Flagships.

Location: Fortbildungszentrum Technik und Umwelt, KIT Campus Nord

Colloquium - The udocker tool

The udocker tool [1] is a container technology that can execute standard Docker containers in user space. The tool itself can be deployed on HPC infrastructure with minimal dependencies on other software or libraries.

The tool implements a subset of Docker's own command-line interface. Among other things, it can pull Docker images from Docker Hub and create and run Docker containers. It implements several execution modes (PRoot, RunC, Fakechroot, and Singularity). It also allows a mode to be selected in which the container automatically runs CUDA applications on NVIDIA GPUs. Official CUDA-enabled Docker images from NVIDIA or TensorFlow can be executed in this mode without modifying or rebuilding them. A pre-built Docker image with a CUDA application can therefore be executed on any compute node with GPUs, which makes the image portable. Furthermore, since the container is executed with direct access to the device (no virtualization layer), there is no performance degradation.

About the speaker: Mario David is a research associate at LIP, the Laboratory of Instrumentation and Experimental Particle Physics. He holds a PhD in Experimental Particle Physics from the University of Lisbon and has worked in numerous distributed computing projects since 2002.

He is a member of the “National Distributed Computing Infrastructure”. He held a research associate position at the Institut de Physique du Globe de Paris (IPGP/CNRS) as a scientific software developer for the VERCE project, working in particular on data-intensive use cases in seismology. Previously, he held post-doc and research associate positions at LIP, participating in several EU projects under FP5, FP6, and FP7.

Throughout those projects, he was actively involved in the validation, quality assurance, and testing of middleware, and in regional and global operations. In EGI, he coordinated the Service Deployment and Validation task.

[1] J. Gomes, I. Campos, E. Bagnaschi, M. David, L. Alves, J. Martins, J. Pina, A. López, P. Orviz, “Enabling rootless Linux Containers in multi-user environments: the udocker tool”, Computer Physics Communications, Volume 232, 2018.

Seminar - Deep Learning, JupyterLab and Containers on HPC (e.g. FH2)

The DEEP project is developing lightweight methods for using distributed computing resources (cloud, HPC). The project uses Docker containers and enables their execution in a multi-user environment. It supports direct GPU access and integrates well with modern authentication and authorization infrastructures (AAI). It also offers a DEEP-as-a-Service API and supports continuous integration and delivery.

The DEEP project successfully passed the mid-term review at the European Commission in Luxembourg and now starts the second half of the project.

In this seminar we will give an overview of the solutions being developed and how they are used by DEEP users. We hope to encourage the adoption of DEEP components at the SCC.

(The talk will be given in English.)

About the Speaker: Dr. Valentin Kozlov works in the department Data Analytics, Access and Applications (D3A) at KIT-SCC. He holds a Ph.D. in physics from Katholieke Universiteit Leuven (KU Leuven, Belgium); his thesis dealt with computer simulations and the setup of a new experiment at ISOLDE, CERN. Before joining KIT-SCC, he worked as a physicist at the Institute for Nuclear Physics (KIT-IKP) and actively participated in the analysis and planning of various experiments in fundamental physics.

Colloquium - Efficient Methodologies for Optimization and Control in Fluid Mechanics

At the beginning of the talk we discuss how to set up robust adjoint solvers for optimization and control in fluid mechanics in an automated fashion through the advanced use of algorithmic differentiation (AD). We then discuss how to extend the resulting fixed-point solvers into so-called one-shot methods, in which the optimality system, consisting of the primal, the dual/adjoint, and the design equations, is solved simultaneously. This is discussed first for steady-state cases and then extended to unsteady partial differential equations (PDEs). Integrating existing solvers for unsteady PDEs into a simultaneous optimization method is challenging. Especially in the presence of chaotic and turbulent flow, solving the initial value problem simultaneously with the optimization problem often scales poorly with the length of the time domain. A new formulation relaxes the initial condition and instead solves a least-squares problem for the discrete partial differential equations. This enables efficient one-shot optimization that is independent of the time domain length, even in the presence of chaos.
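
The one-shot idea can be shown on a deliberately tiny problem of our own choosing, not one from the talk. A scalar state y is defined by the contractive fixed-point iteration y = G(y, u) = 0.5·y + u, and the objective is J(y, u) = ½(y − 3)² + ½·α·u². A conventional approach would first converge the primal solver, then the adjoint solver, and only then take a design step. Here, each iteration performs one primal step, one adjoint step, and one design step. The derivatives are written by hand. In the setting described above they would be generated by algorithmic differentiation. The function name, step size, and α are illustrative assumptions.

```python
from typing import Tuple


def one_shot(alpha: float = 1.0, step: float = 0.1,
             iterations: int = 300) -> Tuple[float, float, float]:
    """Toy one-shot optimization; returns (state y, adjoint lam, design u).

    Hypothetical problem:
      state (fixed point):  y = G(y, u) = 0.5*y + u
      objective:            J(y, u) = 0.5*(y - 3)**2 + 0.5*alpha*u**2
    """
    y, lam, u = 0.0, 0.0, 0.0
    for _ in range(iterations):
        # One primal fixed-point step (not iterated to convergence).
        y = 0.5 * y + u
        # One adjoint step: lam <- lam * dG/dy + dJ/dy.
        lam = 0.5 * lam + (y - 3.0)
        # One design step along the approximate reduced gradient
        # dJ/du + lam * dG/du (here dG/du = 1).
        u = u - step * (alpha * u + lam)
    return y, lam, u


if __name__ == "__main__":
    y, lam, u = one_shot()
    # Analytic optimum for alpha = 1: u = 6 / (4 + alpha) = 1.2, y = 2u = 2.4
    print(round(u, 6), round(y, 6), round(lam, 6))
```

Primal, adjoint, and design variables converge together instead of in nested loops. This is the property that makes one-shot methods attractive when a single primal solve is already expensive.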

Speaker: Prof. Nicolas R. Gauger holds the Chair for Scientific Computing at TU Kaiserslautern and heads the Regional University Computing Center Kaiserslautern (Regionales Hochschulrechenzentrum Kaiserslautern, RHRK). Information on Prof. Gauger's CV and research interests can be found here: https://www.scicomp.uni-kl.de/team/gauger/cv-gauger/

Report from the Supercomputing Conference SC18

About the seminar: The International Conference for High Performance Computing, Networking, Storage and Analysis has been held in the United States since 1988. Participants usually call it simply Supercomputing or SC, and it is the world's largest conference on high-performance computing and supercomputing. The event is divided into a scientific conference with hundreds of individual contributions and an exhibition area for industry partners. Other important industry events, such as the official release of the Top500 list, take place alongside the conference. Fittingly for its 30th anniversary, this year's SC set a new attendance record, attracting more than 13,000 participants and more than 350 exhibitors.

This year, too, several SCC representatives traveled to Dallas, Texas, and will report on their impressions. The seminar aims to give participants a comprehensive picture of the current state of the art and expected developments. It also covers advances in scientific use and national and international strategies in high-performance computing and supercomputing. In addition, the seminar looks back on almost 30 years of high-performance computing at KIT and its predecessor institutions.

Speakers:

Dr. Hartwig Anzt leads the Junior Research Group Fixed-Point Methods for Numerics at Exascale (FiNE). His team develops highly scalable algorithms for future parallel computers with a much larger number of processors than today's systems.

Bruno Hoeft coordinates the operation of the telecommunication networks for large-scale research projects such as BELLE-2.

Dr. Ivan Kondov heads the SimLab NanoMikro, where he works on optimizing software packages and algorithms from nanoscience and materials research for high-performance parallel computers.

Simon Raffeiner works in the operation of the high-performance computers. Among other roles, he represents KIT in the Baden-Württemberg state strategy for high performance computing (bwHPC).

Joint GridKa School and SCC-Colloquium ‑ Thirteen modern ways to fool the masses with performance results on parallel computers

In 1991, David H. Bailey published his insightful paper "Twelve Ways to Fool the Masses When Giving Performance Results on Parallel Computers." In that humorous article, Bailey pinpointed typical "evade and disguise" techniques that were used in many papers for presenting mediocre performance results in the best possible light.

After two decades, it's high time for an update. In 1991 the supercomputing landscape was governed by the "chicken vs. oxen" debate: The famous question "If you were plowing a field, which would you rather use? Two strong oxen or 1024 chickens?" is attributed to Seymour Cray, who couldn't have said it better. Cray's machines were certainly dominating in the oxen department, but competition from massively parallel systems like the Connection Machine was building up.

In the past two decades, hybrid, hierarchical systems, multi-core processors, accelerator technology, and the dominating presence of commodity hardware have reshaped the landscape of High Performance Computing. It's also not so much oxen vs. chickens anymore; ants have received more than their share of hype. However, some things never change, including the tendency to sugarcoat performance results that would never withstand scientific scrutiny if presented in a sound way.

My points (which I prefer to call "stunts") are derived from Bailey's original collection; some are identical or merely reformulated. Others are new, reflecting today's boundary conditions.
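
One pitfall in the spirit of Bailey's list can be shown with simple arithmetic. Speedup S = T_serial / T_parallel and parallel efficiency E = S / p (for p processors) look much better when computed against a slow serial baseline, such as unoptimized code, than against the best available serial code. The timings below are purely hypothetical and only illustrate the calculation.

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """Speedup S = T_serial / T_parallel."""
    return t_serial / t_parallel


def efficiency(t_serial: float, t_parallel: float, p: int) -> float:
    """Parallel efficiency E = S / p for p processors (or cores)."""
    return speedup(t_serial, t_parallel) / p


if __name__ == "__main__":
    # Hypothetical wall-clock times in seconds.
    t_naive_serial = 800.0  # unoptimized serial code
    t_best_serial = 200.0   # well-optimized serial code
    t_parallel_64 = 10.0    # parallel code on 64 cores

    # Against the naive baseline: looks "superlinear" (E > 1).
    print(speedup(t_naive_serial, t_parallel_64),
          efficiency(t_naive_serial, t_parallel_64, 64))  # 80.0 1.25
    # Against the best serial code: the honest picture.
    print(speedup(t_best_serial, t_parallel_64),
          efficiency(t_best_serial, t_parallel_64, 64))   # 20.0 0.3125
```

The parallel run is identical in both cases. Only the choice of baseline changes the headline number, which is why absolute performance and the baseline used should always be reported.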

About the speaker: Georg Hager holds a PhD in Computational Physics from the University of Greifswald. He is a senior researcher in the HPC Services group at Erlangen Regional Computing Center (RRZE) at the Friedrich-Alexander University of Erlangen-Nuremberg and an associate lecturer at the Institute of Physics of the University of Greifswald. He has authored or co-authored more than 100 publications on architecture-specific optimization strategies for current microprocessors, performance engineering of scientific codes on chip and system levels, and special topics in shared memory and hybrid programming. His textbook "Introduction to High Performance Computing for Scientists and Engineers" is recommended or required reading in many HPC-related lectures and courses worldwide. In his teaching activities he puts a strong focus on performance modeling techniques that lead to a better understanding of the interaction of program code with the hardware. In 2011, he received the "Informatics Europe Curriculum Best Practices Award: Parallelism and Concurrency" together with Jan Treibig and Gerhard Wellein for outstanding achievements in teaching parallel computing.

Seminar - Presentation of the Projects CAMMP and Simulierte Welten

About the seminar: With Prof. Dr. Martin Frank and his team, a new project for school students has come to the SCC: since January 2018, CAMMP has also been offered at KIT. CAMMP stands for Computational and Mathematical Modeling Program and aims to raise public awareness of the societal importance of mathematics and simulation sciences. In various event formats, school students and their teachers actively engage in solving problems with the help of mathematical modeling and computers. They investigate real problems from everyday life, industry, or research, which gives them an authentic introduction to mathematical modeling. The research-style work in small teams and the authenticity of the problems make CAMMP useful for career and study orientation. This applies especially to degree programs that combine an engineering science (e.g. mechanical engineering) with mathematics and computer science, such as Computational Engineering Science (RWTH Aachen) or Technomathematik (KIT). CAMMP is characterized by close collaboration among (prospective) teachers, researchers in applied mathematics and simulation sciences, and company representatives.

In this SCC Seminar, we present the various CAMMP event formats. We also give you an insight into one problem from each format that school students tackle and solve with us during a modeling day or a modeling week.

Speakers: Maren Hattebuhr and Kirsten Wohak both studied mathematics and physics for secondary-school teaching (Gymnasium and Gesamtschule) at RWTH Aachen. They are currently pursuing doctorates in mathematics education under Prof. Dr. Martin Frank at KIT. Their research aims to bring mathematical modeling closer to school students through real everyday problems and to provide further training for teachers in this field. Together they lead the CAMMP project at KIT, which cooperates with both the Schülerlabor Mathematik and the SCC project Simulierte Welten. They can draw on many years of experience from Aachen.

Colloquium - Projects and Student Work of the Research Group Artificial Intelligence and Media Philosophy (HfG Karlsruhe)

The research group Artificial Intelligence and Media Philosophy (KIM) was established at the Hochschule für Gestaltung (University of Arts and Design) Karlsruhe in 2018. It gives students of art, philosophy, and computer science the opportunity to discuss the impact of artificial intelligence and media philosophy on society and culture.

The colloquium will present projects and student work. Anyone interested is cordially invited.

More information: http://kim.hfg-karlsruhe.de/events/presentation-of-kim-at-kit/

Speakers: Professor Matteo Pasquinelli and students of the Hochschule für Gestaltung.

Matteo Pasquinelli is Professor of Media Philosophy at the Hochschule für Gestaltung Karlsruhe, where he coordinates the research group on critical machine intelligence. He recently edited the anthology Alleys of Your Mind: Augmented Intelligence and Its Traumas (Meson Press), among other books. His research focuses on the intersection of cognitive sciences, digital economy, and machine intelligence. For Verso Books, he is preparing a monograph with the working title The Eye of the Master: Capital as Computation.

Colloquium - Vocational Training as Mathematisch-technischer Softwareentwickler (MATSE, Mathematical-Technical Software Developer) at RWTH Aachen

Brief portrait and first-hand experience with MATSE training

The MATSE vocational training, which can also be combined with a dual study program, is a balanced combination of mathematics and computer science. Its graduates are practice-oriented specialists in software development. Especially in combination with a dual study program, MATSE can also be a good start to a research-oriented academic career. In this talk, we present a brief portrait of MATSE. Drawing on our own experience, we also report on the contributions that MATSE trainees and graduates make (or can make) to meeting current challenges at technical universities.

Speakers: Matthias Müller, Benno Willemsen (IT Center, RWTH Aachen University)

Prof. Dr. Matthias Müller has been Director of the IT Center and Professor of High Performance Computing in the Faculty of Mathematics, Computer Science and Natural Sciences at RWTH Aachen University since 2013. His research focuses on automatic error analysis of parallel programs, parallel programming models, performance analysis, and energy efficiency.

Benno Willemsen completed the dual study program in Technomathematik at FH Aachen combined with vocational training as a Mathematisch-technischer Assistent (mathematical-technical assistant), followed by the consecutive master's program of the same name. Since 2013 he has been head of training for Mathematisch-technische Softwareentwickler (MATSE) at the RWTH Aachen University site.

Joint GridKa School and SCC-Colloquium - The Quantum Way of Doing Computations

Since the mid-1990s, it has become apparent that one of the century’s most important technological inventions, computers in general, and many of their applications could be further enhanced by using operations based on quantum physics. This is timely, since the classical roadmap for the development of computational devices, commonly known as Moore’s law, will cease to be applicable within the next decade. The reason is that electronic components are becoming so small that they will soon enter the quantum physics realm. Computations, whether they happen in our heads or in any computational device, always rely on real physical processes: data input, data representation in memory, data manipulation using algorithms, and finally data output. Building a quantum computer therefore requires three things. It needs quantum bits (qubits) as storage sites for quantum information, quantum registers and quantum gates for data handling and processing, and quantum algorithms. In this talk, the basic functional principle of a quantum computer will be reviewed. It will be shown how strings of trapped ions can be used to build a quantum information processor and how basic computations can be performed using quantum techniques. Routes towards a scalable quantum computer will be discussed.
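
The notions of qubits, quantum registers, and quantum gates can be illustrated with a small classical state-vector simulation. This sketch does not describe the trapped-ion hardware discussed in the talk. A two-qubit register is stored as four complex amplitudes. A Hadamard gate puts the first qubit into superposition, and a CNOT gate then entangles it with the second qubit, producing the Bell state (|00⟩ + |11⟩)/√2. The function names and the bit-ordering convention (qubit 0 is the most significant bit) are our own assumptions.

```python
import math
from typing import List

State = List[complex]  # 2**n amplitudes for an n-qubit register


def apply_hadamard(state: State, qubit: int, n_qubits: int) -> State:
    """Apply a Hadamard gate to one qubit of an n-qubit register."""
    h = 1 / math.sqrt(2)
    bit = 1 << (n_qubits - 1 - qubit)  # qubit 0 = most significant bit
    new = state[:]
    for i in range(len(state)):
        if (i & bit) == 0:             # visit each (|..0..>, |..1..>) pair once
            j = i | bit
            a0, a1 = state[i], state[j]
            new[i] = h * (a0 + a1)
            new[j] = h * (a0 - a1)
    return new


def apply_cnot(state: State, control: int, target: int, n_qubits: int) -> State:
    """Flip the target qubit in every basis state where the control qubit is 1."""
    c = 1 << (n_qubits - 1 - control)
    t = 1 << (n_qubits - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & c:
            new[i ^ t] = state[i]
    return new


if __name__ == "__main__":
    register = [1 + 0j, 0j, 0j, 0j]             # |00>
    register = apply_hadamard(register, 0, 2)  # (|00> + |10>)/sqrt(2)
    register = apply_cnot(register, 0, 1, 2)   # (|00> + |11>)/sqrt(2)
    probs = [abs(a) ** 2 for a in register]
    print(probs)  # approximately [0.5, 0.0, 0.0, 0.5]
```

The state vector doubles in size with every added qubit. This is why classical simulation quickly becomes infeasible and why physical qubits, such as trapped ions, are needed.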

About the speaker: Rainer Blatt is Professor of Experimental Physics at the University of Innsbruck, Austria, and Scientific Director at the Institute for Quantum Optics and Quantum Information (IQOQI) of the Austrian Academy of Sciences (ÖAW). He has carried out trail-blazing experiments in the fields of precision spectroscopy, quantum metrology, and quantum information processing. In 2004, Blatt’s research group succeeded for the first time in transferring the quantum information of one atom onto another atom in a fully controlled manner (teleportation). Two years later, his group managed to entangle up to eight atoms in a controlled manner. Creating such a first “quantum byte” was a further step towards a quantum computer. He has received numerous awards for his achievements in the fields of quantum optics and metrology. In 2012 the German Physical Society awarded him the Stern-Gerlach Medal. Together with Ignacio Cirac he won the 2009 Carl Zeiss Research Award. He also received a Humboldt Research Award (2013) and an ERC Advanced Grant from the European Research Council (2008). In 2013 the Australian Academy of Science named Rainer Blatt its 2013 Frew Fellow. In 2014 he was awarded the “Tiroler Landespreis für Wissenschaft 2014”, and in 2015 the John Stewart Bell Prize for Research on Fundamental Issues in Quantum Mechanics and their Applications.

Rainer Blatt is a full member of the Austrian Academy of Sciences.

Colloquium - DoSSiER: Database of Scientific Simulation and Experimental Results

Software collaborations in high energy physics regularly perform validation and regression tests for simulation results. This is the case for the Geant4 collaboration and for the developers of the GeantV R&D project, both of which develop toolkits for simulating the interaction of radiation with matter. It is also the case for GENIE, a Monte Carlo event generator for neutrino interactions.

DoSSiER (Database of Scientific Simulation and Experimental Results) is being developed as a central repository for simulation results and for the experimental data used for physics validation. DoSSiER can be easily accessed via a web application. In addition, a web service allows programmatic access to the repository to extract records in JSON or XML exchange formats.

We will describe the functionality and current status of the various components of DoSSiER as well as the technology choices we made. One related project that uses the DoSSiER web service is a Geant4 collaboration initiative to develop a framework for systematically varying the parameters of various Geant4 physics models. The results are then compared with experimental data retrieved from DoSSiER and can be used to tune the model parameters or to estimate systematic uncertainties.

About the speaker: Dr. Hans Wenzel, Computer Professional at the Fermi National Accelerator Laboratory (Fermilab), is a specialist in simulations of particle physics processes and particle detectors. While still a doctoral student at RWTH Aachen, he worked as a guest scientist at Fermilab. After his doctorate he moved to Lawrence Berkeley Laboratory and then to the University of Karlsruhe, but his work remained centered at Fermilab. Since 2000, Hans Wenzel has been employed as a Computer Professional at Fermilab. There he has worked for various experiments and projects, including the CMS experiment and the ILC project.

The talk will be held in English.

Colloquium - Use of operational planning and optimization models in energy trading

As the energy hub and risk manager of the EnBW group, the Trading business unit is responsible for managing all of the group's market risks, in particular volume and price risks, and for optimizing the group's value chain. For the daily operational analysis and valuation of the energy markets (electricity, coal, gas, oil, CO2), of market developments, and of asset portfolio management, the trading departments develop and use high-performance computing clusters (HPC) and mathematical planning and optimization tools from operations research and financial mathematics. These include, for example, planning models for the optimal deployment of the power plant fleet over different planning horizons (spot, forward, and balancing energy markets), market models for hedge management, and the valuation of assets and trading products. The use of these modeling tools is therefore of central importance to trading, because they substantially support vital processes for the group's position management and integrated risk management. The talk addresses the challenges that arise in energy trading from the daily operational use of these planning and optimization tools.
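
In its simplest textbook form, a planning model for optimal power plant deployment reduces to merit-order dispatch: for a single hour with linear generation costs and no ramping, start-up, or network constraints, dispatching plants in order of increasing marginal cost minimizes total cost. The sketch below covers only that special case. The plant names, capacities, and costs are hypothetical, and operational models of the kind described in the talk typically involve many more constraints and planning horizons.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Plant:
    name: str
    capacity_mw: float    # maximum output in MW
    marginal_cost: float  # cost of one additional MWh, e.g. in EUR/MWh


def merit_order_dispatch(plants: List[Plant], demand_mw: float) -> Dict[str, float]:
    """Dispatch plants cheapest-first until the demand of one hour is covered.

    With linear costs and no ramping, start-up, or network constraints, this
    greedy order yields the minimum-cost dispatch for that hour.
    """
    if demand_mw > sum(p.capacity_mw for p in plants):
        raise ValueError("demand exceeds total available capacity")
    schedule: Dict[str, float] = {}
    remaining = demand_mw
    for plant in sorted(plants, key=lambda p: p.marginal_cost):
        output = min(plant.capacity_mw, remaining)
        schedule[plant.name] = output
        remaining -= output
    return schedule


# Hypothetical fleet and demand for one hour.
plants = [
    Plant("gas", 400.0, 70.0),
    Plant("hydro", 200.0, 5.0),
    Plant("coal", 500.0, 40.0),
]
schedule = merit_order_dispatch(plants, demand_mw=900.0)
cost = sum(schedule[p.name] * p.marginal_cost for p in plants)
print(schedule, cost)
```

Once several hours are coupled, for example by storage levels or start-up costs, a greedy rule no longer suffices, which is where the operations research solvers mentioned above come in.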

About the speaker: Dr.-Ing. Andjelko Celan is group expert for 'Model Based Solutions' at EnBW Energie Baden-Württemberg AG, Karlsruhe, and heads market data management in the Trading business unit. His main responsibilities include the design, development, and implementation of business-critical IT solutions for energy trading, with a focus on memory- and compute-intensive analysis, planning, and optimization models, technical/scientific applications, and HPC infrastructures. In this role, he advises and supports the front-office and back-office areas of trading in applying these model systems within the energy-economic, legal-regulatory, and IT frameworks. This also includes the water-balance and heat models used in heat and low-water crisis management to comply with water-rights concession requirements in power plant operation, whose use he coordinates in crisis situations. In cooperation and projects with research institutions, universities, and industry partners, Dr. Celan follows current research topics in HPC and assesses their applicability to questions relevant to EnBW.

Dr. Celan completed a Diplom degree in computer science at the Universität Karlsruhe (TH) and earned his doctorate at the Faculty of Civil Engineering and Surveying in the field of modeling and numerical simulation in energy-related water management.

Colloquium - Increasing resolution in computational fluid dynamics by combining grid-free and grid-based methods

Grid-based methods are the most widely used methods in computational fluid dynamics. Their accuracy is limited by numerical diffusion. To work around this problem, very fine numerical grids with millions of grid points are used. Because of stability requirements, the time step must then also be correspondingly small.
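
The trade-off described above can be made concrete for the simplest textbook case, the first-order upwind scheme for linear advection u_t + a u_x = 0. This scheme is chosen only for illustration; the abstract does not specify which grid-based solvers the talk considers. Explicit stability requires the Courant number C = |a| dt/dx to stay at or below 1, and a modified-equation analysis gives an artificial diffusion coefficient D_num = |a| dx (1 - C) / 2. Refining the grid therefore lowers numerical diffusion, but the stable time step shrinks in proportion to dx. The flow speed and grid spacings below are hypothetical.

```python
def max_stable_dt(velocity: float, dx: float, courant_max: float = 1.0) -> float:
    """Largest explicit time step satisfying the CFL condition |a| dt / dx <= courant_max."""
    return courant_max * dx / abs(velocity)


def upwind_numerical_diffusion(velocity: float, dx: float, dt: float) -> float:
    """Artificial diffusion of first-order upwind: D_num = |a| dx / 2 * (1 - C)."""
    courant = abs(velocity) * dt / dx
    return abs(velocity) * dx / 2.0 * (1.0 - courant)


a = 10.0  # hypothetical flow speed in m/s
for dx in (1e-2, 1e-3, 1e-4):  # grid spacing in m
    dt = max_stable_dt(a, dx, courant_max=0.5)
    d_num = upwind_numerical_diffusion(a, dx, dt)
    print(f"dx = {dx:.0e} m   dt_max = {dt:.1e} s   D_num = {d_num:.1e} m^2/s")
```

Each tenfold refinement reduces D_num and the allowed time step by the same factor of ten. The number of time steps therefore grows along with the number of grid points.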

The grid-free methods presented in this talk are based on the properties of vortex particles. They allow much larger time steps and higher accuracy. The challenges here are keeping the particle distribution uniform and handling the MPI-based communication.
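
As a rough illustration of how vortex particles carry the flow, the sketch below evaluates the two-dimensional Biot-Savart law for a set of regularized point vortices and moves each particle with the velocity induced by all the others. This is a generic textbook formulation, not the speaker's method. The smoothing radius `delta`, the explicit Euler step, and the direct all-pairs sum are illustrative choices. The all-pairs interaction also hints at why particle distribution and MPI communication become challenging at scale, since every particle needs information about every other particle. Production codes typically use tree or fast multipole methods instead.

```python
import math
from typing import List, Tuple

# (x, y, circulation Gamma) of one vortex particle
Particle = Tuple[float, float, float]


def induced_velocity(px: float, py: float, particles: List[Particle],
                     delta: float = 0.05) -> Tuple[float, float]:
    """Velocity at (px, py) from regularized 2D point vortices (Biot-Savart law).

    u = sum_j Gamma_j / (2 pi) * (-(py - y_j), px - x_j) / (r_j^2 + delta^2)
    The smoothing radius delta removes the 1/r singularity at short distances.
    """
    u = v = 0.0
    for x, y, gamma in particles:
        dx, dy = px - x, py - y
        factor = gamma / (2.0 * math.pi * (dx * dx + dy * dy + delta * delta))
        u -= dy * factor
        v += dx * factor
    return u, v


def euler_step(particles: List[Particle], dt: float, delta: float = 0.05) -> List[Particle]:
    """Advect every particle with the velocity induced by all other particles."""
    moved = []
    for i, (x, y, gamma) in enumerate(particles):
        others = particles[:i] + particles[i + 1:]
        u, v = induced_velocity(x, y, others, delta)
        moved.append((x + dt * u, y + dt * v, gamma))
    return moved


# Two co-rotating vortices; they orbit their common center at the origin.
pair: List[Particle] = [(-0.5, 0.0, 1.0), (0.5, 0.0, 1.0)]
for _ in range(100):
    pair = euler_step(pair, dt=0.01)
print(pair)
```

With circulations of equal sign, the circulation-weighted centroid of the particles stays fixed, which makes a convenient sanity check for such a code.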

The talk presents new approaches for coupling the two methods and for their interaction, together with the resulting benefits and challenges for the numerical methods used.

About the speaker: Prof. Nikolai Kornev studied and earned his doctorate at the Marine Technical University in St. Petersburg, where he later became a professor and chair holder. Since 2010 he has headed the Chair of Modeling and Simulation in the Faculty of Mechanical Engineering and Marine Technology at the University of Rostock.

His research focuses on computational thermodynamics and fluid mechanics, heat and mass transfer, mixing, turbulence theory, and ship hydrodynamics. He is the author of books on propeller theory and ship theory, hydromechanics, an introduction to large eddy simulation, and experimental hydromechanics.

The talk will be given in German; the slides are in English.

Colloquium - Advanced networking for data intensive science using SDN/SDX/SDI and related technologies

Software-Defined Networking (SDN) continues to transform all levels of networking, from services to foundation infrastructure. Programmable networking using SDN leverages many advanced virtualization techniques, which enable significantly higher levels of abstraction for network control and management functions at all layers and across all underlying technologies. These approaches allow network designers to create a much wider range of services and capabilities than traditional networks can provide, including highly customized networks. Given the proliferation of SDNs, the need for SDN exchanges (SDXs) has been recognized. With funding from the National Science Foundation (NSF) through the International Research Network Connections (IRNC) program, the StarLight International/National Communications Exchange Facility is designing and implementing an international SDX. This SDX is demonstrating the potential of such next-generation exchanges to significantly transform existing exchanges by enabling new types of services and technologies. Because of their virtualization characteristics, SDXs can be used to create subsets of virtual exchanges customized for specific applications. In addition, this concept can be extended beyond the network to include other infrastructure resources, such as compute resources, storage, sensors, instruments, and much more, essentially creating capabilities for Software Defined Infrastructure (SDI).

About the speaker: Joe Mambretti is Director of the International Center for Advanced Internet Research (iCAIR) at Northwestern University, which is developing digital communications for the 21st century. The Center, which was created in partnership with a number of major high-tech corporations (www.icair.org), designs and implements large-scale services and infrastructure for data-intensive applications (metro, regional, national, and global).

He is also Director of the Metropolitan Research and Education Network (MREN), an advanced high-performance network interlinking organizations and providing services in seven upper-Midwest states. With its research partners, iCAIR has established multiple major national and international network research testbeds, which are used to develop new architectures and technologies for dynamically provisioned communication services and networks, including those based on lightpath switching.

He is also Co-Director of the StarLight International/National Communications Exchange Facility in Chicago (www.startap.net/starlight), which is based on leading-edge optical technologies; a PI of the International Global Environment for Network Innovations (iGENI), funded by the National Science Foundation (NSF) through the GPO; and the PI of StarWave, a multi-100 Gbps communications exchange facility.

Colloquium (Evening Lecture GridKa School) - Automated and Connected Driving from a User-Centric Viewpoint

Automated and connected driving can be seen as a paradigm shift in the automotive domain that will substantially change today's role of the human driver. More and more parts of the driving task will be taken over by technical components in different scenarios. The application areas range from fully automated parking to automated driving on the highway, with different levels of driver involvement in monitoring tasks (SAE levels 2 to 5). It is therefore essential to understand how this role change will affect the human driver and how we can support the driver by designing an easy-to-understand, safe, and comfortable interaction with the automated vehicle. Human Factors research shows that it is crucial to ensure that the driver has a correct mental model of the automation levels and their current status, to avoid mode confusion and resulting operating errors. In addition, transitions of control between the driver and the automated vehicle need careful design that is adapted to the current traffic situation and the driver's state. For example, mobile devices that the driver uses during automated driving can be integrated smoothly into the overall interaction design concept to support transitions. The talk will give an overview of selected research topics in the user-centric development of automated and connected vehicles at DLR. It will underline why it is important to put the human in focus when designing a new, exciting technology such as automated and connected vehicles.

About the Speaker: Prof. Dr. Frank Köster is currently head of the "Automotive Systems" department at DLR (Deutsches Zentrum für Luft- und Raumfahrt) and Professor for "Intelligent Transport Systems" at the University of Oldenburg. He studied computer science at the University of Oldenburg where he graduated in 2001 and habilitated in 2007. Frank Köster is a member of the round table "Autonomous Driving" of the Federal Ministry of Transport and Digital Infrastructure, representative of the DLR in various national and international committees and organizations like SafeTrans and ERTICO as well as referee of research projects, journals and congresses concerning autonomous and connected driving.

Colloquium - On Applications and Approaches to Exploiting the Exascale Computing Potential

With major supercomputing investments on the horizon in the US and other countries, a major focus of the day is the identification of visionary scaling needs in applications and technical approaches to enable massive scaling. In this seminar, some opportunities will be described in exploring applications such as electric grid and IoT simulations, and applying approaches such as discrete event methods and reversible computing. Modeling techniques and performance data will be presented to highlight the dramatic gains possible in some cases when reliance is moved from memory to computation.
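
Reverse computation is one known way of moving reliance from memory to computation in optimistic discrete event simulation. Instead of restoring saved copies of the state, the simulator undoes events by running inverse code. The sketch below shows the idea for a hypothetical single-queue logical process. The state, event types, and function names are illustrative assumptions, not the speaker's software. Note the single bit (`did_serve`) recorded in the forward pass: operations that lose information, such as a branch, need a little saved data to remain reversible.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QueueState:
    """State of one hypothetical logical process: a single service queue."""
    length: int = 0
    served: int = 0


@dataclass
class Event:
    time: float
    kind: str                # "arrival" or "departure"
    did_serve: bool = False  # one bit saved in the forward pass to undo the branch


def forward(state: QueueState, ev: Event) -> None:
    """Process an event by updating the state in place."""
    if ev.kind == "arrival":
        state.length += 1
    else:
        ev.did_serve = state.length > 0
        if ev.did_serve:
            state.length -= 1
            state.served += 1


def reverse(state: QueueState, ev: Event) -> None:
    """Undo forward() by inverting each operation instead of restoring a state copy."""
    if ev.kind == "arrival":
        state.length -= 1
    elif ev.did_serve:
        state.length += 1
        state.served -= 1


def rollback(state: QueueState, history: List[Event], to_time: float) -> List[Event]:
    """Reverse events later than to_time, newest first; return them in time order for re-execution."""
    undone: List[Event] = []
    while history and history[-1].time > to_time:
        ev = history.pop()
        reverse(state, ev)
        undone.append(ev)
    return undone[::-1]


state = QueueState()
history: List[Event] = []
for ev in [Event(1.0, "arrival"), Event(2.0, "departure"), Event(3.0, "departure")]:
    forward(state, ev)
    history.append(ev)
# A straggler event with timestamp 1.5 arrives late: roll back everything after t = 1.5.
undone = rollback(state, history, 1.5)
print(state, [e.time for e in undone])
```

Compared with checkpointing the full state before every event, the memory cost here is one bit per departure, paid for with the extra work of executing `reverse()` during rollbacks.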

About the speaker: Kalyan S. Perumalla is a Distinguished R&D Staff Member and Group Leader of Discrete Computing Systems in the Computational Sciences and Engineering Division at Oak Ridge National Laboratory. He is also an adjunct professor in the School of Computational Science and Engineering at the Georgia Institute of Technology.

Seminar - Simulating turbulent flame propagation on high-performance computers

How scientific questions (e.g., why does this flame suddenly thicken?) give rise to modeling approaches and algorithm development, and how these are adapted step by step for supercomputers/high-performance computers (HPC).

- Combustion simulation: how different flames interact with single vortices or with turbulence

- Studying influencing parameters (curvature, strain, Lewis number) in isolation

- Is a parameter missing from the Borghi diagram?
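
The coordinates of the Borghi diagram, the velocity ratio u'/s_L and the length-scale ratio l_t/δ_L, determine the usual dimensionless groups, so a few lines of code suffice to place a flame in the diagram. The sketch uses the common textbook estimates Da = (l_t/u')/(δ_L/s_L) and Ka = (u'/s_L)^(3/2) (l_t/δ_L)^(-1/2), together with the regime boundaries of the Borghi/Peters diagram (Re_t = 1, Ka = 1, Ka = 100). These relations assume that s_L δ_L is of the order of the kinematic viscosity, and the input values are hypothetical. Notably, the Lewis number Le = α/D, one of the influencing parameters listed above, appears in neither coordinate.

```python
from dataclasses import dataclass


@dataclass
class FlameConditions:
    u_prime: float   # turbulent velocity fluctuation u' in m/s
    l_t: float       # integral length scale in m
    s_l: float       # laminar burning velocity s_L in m/s
    delta_l: float   # laminar flame thickness delta_L in m


def turbulent_reynolds(c: FlameConditions) -> float:
    """Re_t = (u'/s_L) * (l_t/delta_L), assuming s_L * delta_L ~ kinematic viscosity."""
    return (c.u_prime / c.s_l) * (c.l_t / c.delta_l)


def damkoehler(c: FlameConditions) -> float:
    """Da = turbulent time / chemical time = (l_t/u') / (delta_L/s_L)."""
    return (c.l_t / c.u_prime) / (c.delta_l / c.s_l)


def karlovitz(c: FlameConditions) -> float:
    """Ka = (u'/s_L)^(3/2) * (l_t/delta_L)^(-1/2)."""
    return (c.u_prime / c.s_l) ** 1.5 * (c.l_t / c.delta_l) ** -0.5


def regime(c: FlameConditions) -> str:
    """Classify the flame using the regime boundaries of the Borghi/Peters diagram."""
    if turbulent_reynolds(c) < 1.0:
        return "laminar flames"
    ka = karlovitz(c)
    if ka < 1.0:
        return "wrinkled flamelets" if c.u_prime < c.s_l else "corrugated flamelets"
    if ka < 100.0:
        return "thin reaction zones"
    return "broken reaction zones"


def lewis(thermal_diffusivity: float, mass_diffusivity: float) -> float:
    """Le = alpha / D; it does not enter the diagram coordinates used above."""
    return thermal_diffusivity / mass_diffusivity


# Hypothetical conditions: u'/s_L = 5, l_t/delta_L = 25.
flame = FlameConditions(u_prime=2.0, l_t=0.01, s_l=0.4, delta_l=4e-4)
print(regime(flame), round(karlovitz(flame), 2), round(damkoehler(flame), 2))
```

Two flames with identical u'/s_L and l_t/δ_L but different Lewis numbers land on the same point of the diagram, even though their response to curvature and strain can differ.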

IT questions:

- How useful is HPC for my science? Can I really answer scientific questions quickly?

- Supercomputing requirements for flow simulations in general and for flows with chemical reactions

- How do I use the supercomputer (in)efficiently? Experiences with compiler options, I/O, support tickets, and wiki pages

Jordan Denev of SimLab Energy supports engineering applications, in particular flow simulations, within bwHPC and SimLab Energy. Mr. Denev studied mechanical engineering in Sofia and earned his doctorate in fluid mechanics. He has many years of research experience in the numerical modeling and simulation of flows combined with complex phenomena such as turbulence, heat and mass transfer, thermal radiation, and chemical reactions. Most recently, he worked at KIT at the Engler-Bunte-Institut and at the DVGW research center at the EBI.

Colloquium - Heterogeneous Programming with OpenMP 4.5

In this presentation, we will review OpenMP 4.0 features for heterogeneous programming with a focus on the Intel Xeon Phi coprocessor. After a short recap of the OpenMP programming model for multi-threading, we will take a deep dive into the OpenMP 4.0 features for offloading computation to coprocessor devices. The features discussed include the target, teams, and distribute constructs. We will review the additions made in OpenMP 4.5 for asynchronous offloading and synchronization of offloaded execution. The presentation will close with a case study of porting the coupled-cluster method of NWChem to the Intel Xeon Phi coprocessor using the OpenMP 4.x features presented before. The ported code ran on 60,000 heterogeneous cores, delivering about a 2x speed-up over Xeon-only execution.

Dr. Michael Klemm is part of Intel's Software and Services Group, Developer Relations Division. His focus is on High Performance and Throughput Computing. Michael's areas of interest include compiler construction, design of programming languages, parallel programming, and performance analysis and tuning. Michael is Intel's representative in the OpenMP Language Committee and leads the efforts to develop error-handling features for OpenMP. He is the project lead and maintainer of the pyMIC offload module for Python.

Colloquium (joint with IKP) - All-sky search for gravitational waves using supercomputing systems

Gravitational waves are the last prediction of Albert Einstein's general theory of relativity still awaiting direct experimental verification. Observations of gravitational waves will open a new field, gravitational-wave astronomy, providing unique information about exotic objects such as binary systems of compact objects, black holes, supernova explosions, and rotating neutron stars, as well as about the earliest stages of the evolution of our Universe.

The first science data from the global network of advanced gravitational-wave detectors (the LIGO, GEO600, and Virgo long-arm interferometers) have just started to be collected, and data taking will continue for several years. Searching for sources in noisy data is algorithmically challenging, since many possible types of signals must be predicted and checked simultaneously. It is also computationally demanding because of the large volume of the parameter space, describing the source and its position, over which the searches must be carried out.
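
The cost of such a search can be seen in a deliberately simplified toy problem: a single sinusoid of unknown frequency and phase buried in white noise. The sketch removes the unknown phase analytically by correlating with cosine and sine templates and scans only the frequency on a grid. Real continuous-wave searches must in addition cover frequency derivatives, sky position (through the Doppler modulation caused by the Earth's motion), and much longer data spans, so the number of templates, and with it the cost, grows by many orders of magnitude. The signal parameters, noise level, and function names below are hypothetical, and this is not the Virgo data analysis toolkit.

```python
import math
import random
from typing import List, Sequence, Tuple


def detection_statistic(data: Sequence[float], freq: float, dt: float) -> float:
    """Phase-maximized power of a sinusoid with frequency freq in the data.

    Correlating with both cos and sin templates and adding the squares makes the
    statistic independent of the unknown signal phase.
    """
    c = s = 0.0
    for k, x in enumerate(data):
        phase = 2.0 * math.pi * freq * k * dt
        c += x * math.cos(phase)
        s += x * math.sin(phase)
    return (c * c + s * s) / len(data)


def grid_search(data: Sequence[float], freqs: Sequence[float], dt: float) -> Tuple[float, float]:
    """Evaluate every template on the grid; the cost scales as len(freqs) * len(data)."""
    best = max(freqs, key=lambda f: detection_statistic(data, f, dt))
    return best, detection_statistic(data, best, dt)


# Synthetic data: 10 s sampled at 100 Hz, a 12.3 Hz signal of amplitude 0.5 in unit-variance noise.
rng = random.Random(42)
dt, n = 0.01, 1000
data: List[float] = [0.5 * math.sin(2.0 * math.pi * 12.3 * k * dt + 0.7) + rng.gauss(0.0, 1.0)
                     for k in range(n)]
freqs = [0.1 * i for i in range(10, 300)]  # 1.0 to 29.9 Hz in steps of 1/T = 0.1 Hz
best_f, best_stat = grid_search(data, freqs, dt)
print(f"best frequency: {best_f:.1f} Hz, statistic: {best_stat:.1f}")
```

Even this one-parameter toy evaluates 290 templates against 1000 samples. Adding further search dimensions multiplies the template count, which is why such searches are natural candidates for hybrid parallelization on supercomputers.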

Recently, two members of the Virgo Collaboration, Andrzej Królak and Michał Bejger, won DAAD fellowships to work as visiting scientists at SCC in a joint R&D project with the HPC experts of SimLab EA Particles, led by Gevorg Poghosyan. The aim is to develop a new hybrid parallel version of the data analysis toolkit and a workflow for efficiently performing gravitational-wave searches on supercomputing systems.

Speakers: Andrzej Królak is a Professor at the Institute of Mathematics of the Polish Academy of Sciences. His research field is gravitation and the general theory of relativity, especially the phenomena predicted by this theory, such as black holes and gravitational waves. His present scientific work focuses on theoretical and practical aspects of the analysis of data from gravitational-wave detectors. He is a member of the board of the Virgo gravitational-wave detector project, leader of the Polish members of the Virgo Collaboration, and Co-Chair of the joint LIGO Scientific Collaboration and Virgo Collaboration group that searches for gravitational radiation from spinning neutron stars. Prof. Królak has spent a number of years abroad as a visitor, among others at the Max Planck Institute for Gravitational Physics in Potsdam and Hannover and at the Jet Propulsion Laboratory in Pasadena, California.

Michał Bejger is an astrophysicist at the Nicolaus Copernicus Astronomical Center in Warsaw, Poland, where he obtained his PhD. He was previously a Marie Curie post-doctoral fellow at the Observatoire de Paris and, more recently, a visiting astronomer. He studies the interiors of neutron stars and the vicinity of black hole horizons, using numerical general relativity as a tool to describe relativistic compact objects and gravitational waves. Within the LIGO-Virgo collaboration, which aims at the direct detection of gravitational waves with kilometer-scale laser interferometers, he currently works on the data analysis of periodic gravitational waves emitted by rotating neutron stars and on their astrophysical models. He is also co-developing SageManifolds, a free and open-source differential geometry and tensor calculus package for symbolic calculations in general relativity. Michał is an editor of the Polish monthly magazine Delta, aimed at gifted high-school and undergraduate students interested in computer science, mathematics, physics, and astronomy.

Slides about Gravitational Waves and supercomputing application developed in SCC

Seminar - bwCloud: Cross-site server virtualization

The motivation of bwCloud is to build a prototype cloud infrastructure based on universities and research institutes in Baden-Württemberg. The project's main goal is to develop an implementable and elastic approach to organizing federated virtualization of servers and services across different sites.

Speaker: Oleg Dulov is a member of the bwCloud project and the SCC mail host team. He studied applied mathematics at Volgograd State University and Communication and Media Engineering at Hochschule Offenburg, where he received his Master's degree. He worked at Hochschule Offenburg in the Department of Mechanical & Process Engineering and, since 2008, has worked at KIT SCC in the projects D-Grid and GGUS.

Colloquium - Questions of the equation of state based on the thermal evolution of stars due to neutrino radiation

The quark-hadron phase transition at extremely high densities is one of the interesting questions in nuclear physics and astrophysics. Such high densities are assumed to occur in the cores of compact stars, after supernova explosions, or in binary mergers. If such a transition is possible, would it be a smooth crossover or rather a jump-like phase transition? The answer to this question is especially relevant to the problem of the existence of a critical endpoint in the quantum chromodynamics (QCD) phase diagram. To find the most realistic model equation of state, we simulate the mechanical and thermal evolution of compact stellar objects and compare the results with observational data using Bayesian analysis. Doing this with high precision and at the relevant scientific complexity requires huge amounts of computation on massively parallel systems.
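
The Bayesian comparison step can be sketched as follows. Each candidate equation of state is represented by the observable it predicts, here a hypothetical cooling curve (log surface temperature versus age). A Gaussian likelihood scores each model against observed values with their uncertainties, and Bayes' theorem turns likelihoods and priors into posterior model probabilities. The model names, curves, and observations are invented for illustration. A realistic analysis would also marginalize over each model's free parameters, which this sketch omits.

```python
import math
from typing import Callable, Dict, Optional, Sequence, Tuple

# Hypothetical observation: (age, observed log10 surface temperature, 1-sigma error).
Observation = Tuple[float, float, float]
Prediction = Callable[[float], float]


def log_likelihood(predict: Prediction, observations: Sequence[Observation]) -> float:
    """Gaussian log-likelihood of independent observations given a model prediction."""
    return sum(-0.5 * ((y - predict(t)) / err) ** 2 - math.log(err * math.sqrt(2.0 * math.pi))
               for t, y, err in observations)


def posterior_probabilities(models: Dict[str, Prediction],
                            observations: Sequence[Observation],
                            priors: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Posterior P(model | data) from priors and likelihoods (uniform priors by default)."""
    if priors is None:
        priors = {name: 1.0 / len(models) for name in models}
    log_post = {name: math.log(priors[name]) + log_likelihood(predict, observations)
                for name, predict in models.items()}
    peak = max(log_post.values())  # subtract the maximum to avoid underflow in exp()
    weights = {name: math.exp(lp - peak) for name, lp in log_post.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


models: Dict[str, Prediction] = {
    "eos_A": lambda t: 6.2 - 0.1 * t,  # hypothetical cooling curve
    "eos_B": lambda t: 6.0 - 0.1 * t,  # hypothetical, systematically cooler
}
observations = [(1.0, 6.1, 0.05), (2.0, 6.0, 0.05), (3.0, 5.9, 0.05)]
posterior = posterior_probabilities(models, observations)
print(posterior)
```

In a real study, each call to a prediction function would be a full simulation of stellar evolution. This is why evaluating many candidate models against the data calls for massively parallel systems.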

Speaker: Hovik Grigorian is a senior researcher at the IT Laboratory of JINR of the Russian Academy of Sciences and an assistant professor of theoretical and computational physics at Yerevan State University. He was a long-term visiting scientist at the University of Rostock. Since 2011, he has been the Armenian representative of the Research Network "CompStar". His main research interests include general relativity and alternative theories of gravitation, quasi-stationary and dynamic stellar evolution, and nuclear matter under extreme conditions. He is the author of about 100 publications and book chapters, which have received more than 1500 citations.

Seminar - To Cool or Not to Cool? Energy Efficiency from an HPC-Operator's View

Power consumption and energy efficiency in HPC have numerous facets. They are influenced by a large variety of hardware, such as servers, network, storage, power supplies, fans, and much more, as well as by operational aspects such as scheduling and by the way algorithms and programs are designed and written. In addition, how we prevent all the system components from overheating is extremely important. Nowadays, there are many ways and ideas for keeping such large systems cool enough without wasting too much additional electrical power for this purpose. From an HPC operator's view, this is indeed a very 'hot' topic, since energy efficiency can be greatly enhanced if an optimal cooling method is chosen. This talk is intended as a survey lecture on the great variety of methods and ideas for cooling large HPC systems. We will discuss these methods and point out their pros and cons in light of energy efficiency.
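
A widely used way to quantify the cooling overhead discussed above is Power Usage Effectiveness (PUE), the ratio of total facility power to the power drawn by the IT equipment; an ideal facility would reach 1.0. The abstract does not name PUE, and the two cooling scenarios below use invented load figures purely to show the arithmetic.

```python
HOURS_PER_YEAR = 8760


def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT power (1.0 is ideal)."""
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


def annual_overhead_mwh(cooling_kw: float, other_overhead_kw: float) -> float:
    """Energy spent outside the IT equipment over one year of constant load, in MWh."""
    return (cooling_kw + other_overhead_kw) * HOURS_PER_YEAR / 1000.0


# Hypothetical 1 MW IT load with two different cooling approaches.
scenarios = {
    "chiller-based air cooling": (1000.0, 500.0, 100.0),
    "warm-water direct liquid cooling": (1000.0, 50.0, 100.0),
}
for name, (it_kw, cooling_kw, other_kw) in scenarios.items():
    print(f"{name}: PUE = {pue(it_kw, cooling_kw, other_kw):.2f}, "
          f"overhead = {annual_overhead_mwh(cooling_kw, other_kw):.0f} MWh/year")
```

PUE only captures facility overhead. It says nothing about how efficiently the hardware, scheduling, and software turn the IT power into useful computation, which are the other facets listed above.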

Rudolf Lohner is a Professor of Mathematics at the Steinbuch Centre for Computing (SCC) and the Department of Mathematics of the Karlsruhe Institute of Technology (KIT) in Germany. In 2001 he joined the Scientific Supercomputing Centre of Karlsruhe University (the predecessors of SCC and KIT, respectively), where he is now Head of the Department of Scientific Computing and Applications. Prof. Lohner is President of HP-CAST, the worldwide user group of technical HPC users of HP systems. His main research interests include applied mathematics, self-verifying numerical algorithms, scientific supercomputing, and grid computing.

Seminar - Information session on data protection for the SCC

- Access permissions for data; for example, personal data may only be processed if a legal basis or the consent of the data subject permits it

- Procedure for setting up SCC services for KIT with regard to the processing of personal data and, where applicable, commissioned data processing (Auftragsdatenverarbeitung)

Colloquium - The Data-enabled Revolution in Science and Society: A Need for National Data Services and Policy

NCSA director Edward Seidel is a distinguished researcher in high-performance computing, relativity, and astrophysics with an outstanding track record as an administrator. In addition to leading NCSA, he is also a Founder Professor in the University of Illinois Department of Physics and a professor in the Department of Astronomy. His previous leadership roles include serving as the senior vice president for research and innovation at the Skolkovo Institute of Science and Technology in Moscow, directing the Office of Cyberinfrastructure and serving as assistant director for Mathematical and Physical Sciences at the U.S. National Science Foundation, and leading the Center for Computation & Technology at Louisiana State University. He also led the numerical relativity group at the Max Planck Institute for Gravitational Physics (Albert Einstein Institute) in Germany. Seidel is a fellow of the American Physical Society and of the American Association for the Advancement of Science, as well as a member of the Institute of Electrical and Electronics Engineers and the Society for Industrial and Applied Mathematics. His research has been recognized by a number of awards, including the 2006 IEEE Sidney Fernbach Award, the 2001 Gordon Bell Prize, and the 1998 Heinz Billing Award. He earned a master's degree in physics at the University of Pennsylvania in 1983 and a doctorate in relativistic astrophysics at Yale University in 1988.

Colloquium (joint with GridKa) - Robotics & Artificial Intelligence

In recent years, robotics has also gained a lot of interest in the area of artificial intelligence. While robotic systems have long been used as tools to implement classical AI approaches in areas such as object recognition, environment representation, and path and motion planning, researchers now begin to understand that the system (robot) itself is part of the question and has to be taken into account when tackling AI questions. This talk will survey the state of the art in robotics and outline ways to tackle the question of AI with the systems as an integral part of the approach. Future milestones and key achievements will be discussed as a proposal for tackling the big question of AI.

Frank Kirchner studied Computer Science and Neurobiology at the University of Bonn from 1989 to 1994, where he received his Diploma (Dipl. Inf.) in 1994 and his doctorate (Dr. rer. nat.) in Computer Science in 1999. From 1994 he was a Senior Scientist at the 'Gesellschaft für Mathematik und Datenverarbeitung' (GMD) in Sankt Augustin, which became part of the Fraunhofer Society in 1999. In this position, Dr. Kirchner headed a team focusing on cognitive and bioinspired robotics. From 1998 he was also a Senior Scientist at the Department of Electrical Engineering at Northeastern University in Boston, MA, USA, where he set up a branch of the Fraunhofer Institute for Autonomous Intelligent Systems (FHG-AIS). In 1999, Dr. Kirchner was appointed 'Tenure Track Assistant Professor' at Northeastern University. In 2002 he was appointed 'Full Professor' at the University of Bremen, where he has headed the Chair of Robotics in the Faculty of Computer Science and Mathematics ever since. Since December 2005, Dr. Kirchner has also been Director of the Robotics Innovation Center (RIC) and, at the same time, spokesperson of the Bremen site of the German Research Center for Artificial Intelligence (DFKI). Prof. Kirchner regularly serves as a reviewer and editor for a series of international scientific journals, conferences, and institutions, and is the author or co-author of more than 150 publications in the field of robotics and AI.

Colloquium - The XSEDE Global Federated File System - Breaking Down Barriers to Secure Resource Sharing

The GFFS offers scientists a simplified means through which they can interact with and share resources. Currently, many scientists struggle to exploit distributed infrastructures because they are complex, unreliable, and require the use of unfamiliar tools. For many scientists, such obstacles interfere with their research; for others, these obstacles render their research impossible. It is therefore essential to lower the barriers to using distributed infrastructures.

The first principle of the GFFS is simplicity. Every researcher is familiar with the directory-based paradigm of interaction; the GFFS exploits this familiarity by providing a global shared namespace. The namespace appears to the user as files and directories, so that the scientist can easily organize and interact with a variety of resource types. Resources can include compute clusters, running jobs, directory trees in local file systems, groups, as well as storage resources at geographically dispersed locations. Once mapped into the shared namespace, resources can be accessed by existing applications in a location-transparent fashion, i.e., as if they were local.
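
The idea of mapping heterogeneous resources into one directory tree can be sketched with a toy in-memory namespace. This is not the GFFS API or implementation: the `GlobalNamespace` and `Resource` classes, the site names, and the paths are hypothetical. The sketch only illustrates how a location-independent path can resolve to a resource that lives at a particular site, and how resources from different sites can appear side by side in one directory listing.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Resource:
    kind: str    # hypothetical resource type, e.g. "directory" or "queue"
    site: str    # where the resource physically lives
    handle: str  # site-local identifier, e.g. a local path or queue name


class GlobalNamespace:
    """Toy global namespace: resources at different sites appear in one directory tree."""

    def __init__(self) -> None:
        self._entries: Dict[str, Resource] = {}

    @staticmethod
    def _normalize(path: str) -> str:
        """Canonical form: leading slash, no empty or trailing components."""
        return "/" + "/".join(part for part in path.split("/") if part)

    def link(self, path: str, resource: Resource) -> None:
        """Map a resource into the shared namespace under the given path."""
        self._entries[self._normalize(path)] = resource

    def resolve(self, path: str) -> Resource:
        """Look up the resource behind a location-independent path (KeyError if absent)."""
        return self._entries[self._normalize(path)]

    def listdir(self, directory: str) -> List[str]:
        """Names directly below a directory, regardless of which site they live on."""
        prefix = self._normalize(directory).rstrip("/") + "/"
        names = {path[len(prefix):].split("/", 1)[0]
                 for path in self._entries if path.startswith(prefix)}
        return sorted(names)


ns = GlobalNamespace()
ns.link("/home/alice/data", Resource("directory", "campus-cluster", "/scratch/alice/data"))
ns.link("/home/alice/results", Resource("directory", "center-b", "/work/alice/results"))
ns.link("/queues/shared-gpu", Resource("queue", "center-a", "gpu-queue"))
print(ns.listdir("/home/alice"), ns.resolve("home/alice/data/").site)
```

A real federated file system additionally has to handle authentication, access control, and the actual remote I/O behind each handle, none of which this sketch attempts.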

In this talk I will present the GFFS, its functionality, its motivation, as well as typical use cases. I will demonstrate many of its capabilities, including:

1) how to securely share data, storage, and computational resources with collaborators;

2) how to access data at the centers from campus and vice versa;

3) how to create shared compute queues with collaborators; and

4) how to create jobs and interact with them once started.

Andrew Grimshaw received his Ph.D. from the University of Illinois at Urbana-Champaign in 1988. He then joined the University of Virginia as an Assistant Professor of Computer Science, and became Associate Professor in 1994 and Professor in 1999. He is the chief designer and architect of Mentat and Legion. In 1999 he co-founded Avaki Corporation and served as its Chairman and Chief Technical Officer until 2005, when Avaki was acquired by Sybase. In 2003 he won the Frost and Sullivan Technology Innovation Award. Andrew is a member of the Global Grid Forum (GGF) Steering Committee and the Architecture Area Director in the GGF. He has served on the National Partnership for Advanced Computational Infrastructure (NPACI) Executive Committee, the DoD MSRC Programming Environments and Training (PET) Executive Committee, the CESDIS Science Council, the NRC Review Panel for Information Technology, and the Board on Assessment of NIST Programs. He is the author or co-author of over 50 publications and book chapters.

Colloquium - Applied Mathematics at the Fraunhofer Institute SCAI: Between Research and Development

Mathematics is not only a subject for academic institutions. In finance and insurance, mathematics clearly plays a major role. Above all, methods from numerical mathematics and optimization influence our daily lives and are therefore ubiquitous. Developing innovative products once required only simple textbook methods. Today, developers increasingly rely on simulations and elaborate computations. The challenge is to bring innovative product ideas to market cost-effectively and in ever shorter time. Examples range from developing a seal for car doors and optimizing cutting patterns to providing essential support in the search for mineral resources.

Using examples from everyday work at Fraunhofer SCAI, I will show which interesting questions arise in collaboration with industrial partners and how mathematics helps to solve them. Mathematics, and numerical mathematics in particular, only leads to excellent results if the algorithms are also implemented well. Here, too, I will show how Fraunhofer SCAI helped a partner accelerate its computational tasks. In this context, I will also briefly discuss the work of the HPC group in publicly funded projects.

Dr. Thomas Soddemann has headed the HPC activities at the Fraunhofer Institute for Algorithms and Scientific Computing (SCAI) since 2008. He studied theoretical physics at the universities of Paderborn, Freiburg (Diplom) and Mainz (doctorate, with research carried out at the Max Planck Institute for Polymer Research). He spent his postdoctoral years in the USA at Johns Hopkins University in Baltimore, MD, and at Sandia National Laboratories in Albuquerque, NM. He then moved to the computing center of the Max Planck Society to provide application support on HPC systems.

Colloquium - ADEI: Intelligent visualization and management of time-series data in scientific experiments

Scientific experiments worldwide produce huge quantities of information. Data formats, underlying storage engines and sampling rates vary significantly. The components of large international experiments are often located in multiple, sometimes hardly accessible places, and are developed by only loosely connected research groups. Handling the distributed and heterogeneous nature of modern experiments requires complex data management systems. Senior experimentalists focus on the technical challenges of the physics, so data management is normally done by PhD students alongside their analysis and simulation tasks. At IPE, we have developed the ADEI (Advanced Data Management Infrastructure) platform to provide uniform access to time-series data from multiple experiments. ADEI has a modular architecture. A backend running on the server analyzes incoming data and generates the caches required to display preview charts quickly. Following interface guidelines set by Google Maps, the ADEI web interface provides a visual data browser with extensible search capabilities and export to multiple data formats. Finally, a web service interface between the backend and the web interface can be used by third-party applications to obtain standardized access to data from different subsystems. Using this interface, we have integrated ADEI with ROOT and National Instruments Diadem Insight. The system currently runs at several sites at KIT and in Armenia, where it manages meteorological, cosmic-ray and slow-control data. A data management platform that supports multiple experiments saves effort, which allows a more elaborate system to be built. It also simplifies the analysis of newly obtained data and its comparison with archives of related experiments that were completed earlier or are still being run by other collaborations. We will present the features of the ADEI platform and discuss the current focus of development.
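The abstract does not say how ADEI organizes the caches it uses for fast preview charts. One common approach, offered here purely as an assumption, is a multi-resolution cache. Raw samples are pre-aggregated into min/max/mean buckets at several fixed widths, and a query picks the finest level that still fits the chart's point budget. The names `Bucket`, `aggregate` and `PreviewCache`, the bucket widths and the 500-point budget are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Bucket:
    start: float    # start time of the bucket (seconds)
    minimum: float
    maximum: float
    mean: float
    count: int      # number of raw samples aggregated


def aggregate(times: Sequence[float], values: Sequence[float], width: float) -> list[Bucket]:
    """Group raw samples into fixed-width time buckets and summarize each one."""
    groups: dict[int, list[float]] = {}
    for t, v in zip(times, values):
        groups.setdefault(int(t // width), []).append(v)
    return [Bucket(k * width, min(vs), max(vs), sum(vs) / len(vs), len(vs))
            for k, vs in sorted(groups.items())]


class PreviewCache:
    """Hypothetical multi-resolution cache, built once by a backend."""

    def __init__(self, times: Sequence[float], values: Sequence[float],
                 widths: Sequence[float] = (60.0, 3600.0, 86400.0)) -> None:
        # One pre-aggregated level per bucket width, finest first.
        self.levels = {w: aggregate(times, values, w) for w in sorted(widths)}

    def query(self, t0: float, t1: float, max_points: int = 500) -> tuple[float, list[Bucket]]:
        # Return the finest level whose buckets starting in [t0, t1) fit the point budget.
        for w, buckets in self.levels.items():
            rows = [b for b in buckets if t0 <= b.start < t1]
            if len(rows) <= max_points:
                return w, rows
        # No level fits: fall back to the coarsest one.
        coarsest = max(self.levels)
        return coarsest, [b for b in self.levels[coarsest] if t0 <= b.start < t1]


if __name__ == "__main__":
    times = [10.0 * i for i in range(17_280)]        # two days of 10-second samples
    values = [float(i % 100) for i in range(17_280)]
    cache = PreviewCache(times, values)
    level, rows = cache.query(0.0, 2 * 86_400.0)
    print(level, len(rows))  # 3600.0 48
    level, rows = cache.query(0.0, 3_600.0)
    print(level, len(rows))  # 60.0 60
```

Each bucket stores its minimum and maximum alongside the mean. This keeps short spikes visible in a zoomed-out chart, which plain averaging would smooth away.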

Suren Chilingaryan is a data processing expert at the Institute for Data Processing and Electronics at the Karlsruhe Institute of Technology. His research interests include data management systems for large scientific experiments, GPU-accelerated online monitoring systems and software optimization. He graduated in mathematics from Moscow State University and was awarded a doctoral degree in Computer Science by the Armenian National Academy of Sciences.

Seminar - Simulierte Welten (Simulated Worlds): Simulation and High-Performance Computing from a Different Perspective

The modern world is hardly imaginable without computer-aided simulation. Large technical developments in particular can no longer do without high-performance computing techniques. Simulations allow cars and aircraft to be developed with fewer resources, the effects of new drugs are modeled and tested on the computer, and complex models make weather forecasts more reliable. Simulation also plays an important, often unnoticed role in economic forecasting and political decision-making. These present-day challenges demand ever greater computing power to achieve good results. Even long-term decisions and risk analyses rely on simulations and on compute-intensive analyses of large data sets, a topic now on everyone's lips as "Big Data". Future generations, that is, today's school students, will likely be affected even more than we are today. Our goal is therefore to spark interest in simulation while students are still at school and to build the relevant skills. This talk presents which concepts are suitable for this purpose and how we give school students insight into science.

Thomas Gärtner is a research associate at the Steinbuch Centre for Computing (SCC) of the Karlsruhe Institute of Technology (KIT). He is responsible for operating ILIAS, the learning management system used across KIT, and for its integration into the KIT IT infrastructure. In the "Simulierte Welten" project, he represents the scientific side and is responsible for project coordination, among other tasks. As an expert in e-learning, new media and information systems, he is responsible for providing and improving the quality of the online teaching and learning content developed within the project.

Colloquium - Breaking the power wall in Exascale Computing

What is Exascale computing? Roughly, it is the goal of designing and building supercomputers that deliver the combined computing capability of one million desktop computers. Forty years of Moore's law have enabled exponential growth in computing capabilities. We reached Petascale computing five years ago, so we set the Exascale target for the end of this decade. However, Moore's law has flattened, and we can no longer simply increase clock frequency. In fact, power is perhaps the single most important roadblock to Exascale. Scaling today's solutions projects to a required power of one gigawatt. We will discuss new computing paradigms that focus on drastically reducing data movement and on carefully combining exact and inexact computing. We will show that there is plenty of room for improvement in algorithms and software to help tear down the power wall for Exascale computing.
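A back-of-the-envelope reading of the two figures in this abstract follows. It assumes that Exascale means a sustained rate of 10^18 floating-point operations per second and takes "a gigawatt" as exactly 10^9 W.

```latex
\frac{10^{18}\ \text{FLOP/s}}{10^{6}\ \text{desktops}} = 10^{12}\ \text{FLOP/s per desktop}\ (\approx 1\ \text{TFLOP/s each})

\frac{10^{18}\ \text{FLOP/s}}{10^{9}\ \text{W}} = 10^{9}\ \text{FLOP/s per watt} = 1\ \text{GFLOP/s/W}
```

Under these assumptions, the gigawatt projection corresponds to an energy efficiency of about 1 GFLOP/s per watt. Any smaller power budget requires proportionally higher efficiency.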

Dr. Costas Bekas is a senior researcher in the Computational Sciences Group at IBM Research - Zurich. He received his B.Eng., M.Sc. and Ph.D. degrees, all from the Computer Engineering & Informatics Department, University of Patras, Greece, in 1998, 2001 and 2003, respectively. From 2003 to 2005, he worked as a postdoctoral associate with Prof. Yousef Saad at the Computer Science & Engineering Department, University of Minnesota, USA. Costas's main focus is on massively parallel systems and their impact on everyday life, science and business. His research agenda spans numerical and combinatorial algorithms, energy-aware and fault-tolerant systems and methods, and computational science. Dr. Bekas brings more than 10 years of experience in scientific and high-performance computing. In recent years he has been very active in the field of energy-aware HPC, proposing and publishing new energy-aware performance metrics and demonstrating their impact on HPC and large-scale industrial problems. Dr. Bekas has been a strong advocate of energy-aware HPC, serving on the program committee of the EnA-HPC conference series and organizing relevant sessions at key conferences. Dr. Bekas is a recipient of the 2012 PRACE Award and was a Gordon Bell finalist at SC 2013.

Seminar - SWITCHaai - A Federated Infrastructure for Authorization: Its Challenges and Opportunities

The talk gives an overview of the Authentication and Authorization Infrastructure (AAI) of the Swiss universities. The AAI is an established infrastructure in the Swiss higher-education landscape and is used by web-browser-based applications. The talk covers how the AAI works, its structure, its applications and its governance, as well as its future opportunities and challenges.

Speaker: Patrik Schnellmann is a trained banking professional and studied computer science at the University of Bern. After gaining experience in the financial industry and the Swiss federal administration, he joined SWITCH in 2004. SWITCH is the Swiss network for research and education, the Swiss counterpart to Germany's DFN. Since 2012 he has focused on business development at SWITCH and has been pursuing further education at ETH Zurich in the "Master of Advanced Studies in Management, Technology and Economics" (MAS-MTEC) program.

Seminar - Computing Challenges in Gravitational Wave Search

Because gravitational-wave signals are rare and deeply buried in the detector noise, their detection will require extraordinarily large computing resources and very efficient data analysis algorithms. The lecture will discuss these problems.

Speaker: Andrzej Krolak is a professor at the Institute of Mathematics, Polish Academy of Sciences, and at the National Center for Nuclear Studies in Warsaw. He is a relativist by training who specializes in gravitational-wave data analysis. He is a leader of the Polish member group of the Virgo gravitational-wave detector project and a member of the board of this project. He is a co-chair of the joint LIGO Scientific Collaboration and Virgo Collaboration group that searches for gravitational radiation from spinning neutron stars. He has spent a number of years abroad as a visitor, among others at the Max Planck Institute for Gravitational Physics in Potsdam and Hannover and at the Jet Propulsion Laboratory in Pasadena, California.

Seminar - Windows Server 2012 - An Overview of What's New

Microsoft's new server operating system, Server 2012, has arrived, and the final version has been available for a few days. It is high time to get an overview of the new features and improvements it contains. Is it worth switching to Server 2012 right away, or is Windows Server 2008 R2 still sufficient? For which purposes and scenarios is it advisable to adopt the new version?

The talk gives an overview of the new features, particularly in Active Directory and systems management. It also covers improvements in areas such as DirectAccess and BranchCache.

Colloquium - No bits? Implementing Brain-derived Computing

Modern computing is based on the fundamental work of Boole, Shannon, Turing and von Neumann. They provided the mathematical, theoretical, conceptual and architectural foundations of the information age. Turing himself pointed out that the brain is an information processing system and conjectured that it might be possible to synthesize its functions. With advances in neuroscience and information technology, several research projects worldwide are trying to use this knowledge to build brain-inspired computing devices. Such devices have the potential to address three critical problems of modern computing: energy, reliability and software. The lecture will introduce the research field of brain-inspired computing, present recent results and offer an outlook on the future.

Seminar - Interoperability Reference Model Design for e-Science Infrastructures

The term e-science describes a new research field that focuses on collaboration in key areas of science, using next-generation data and computing infrastructures (i.e., e-science infrastructures) to extend the potential of scientific computing. More recently, e-science applications have grown more complex as they embrace multiple physical models (i.e., multi-physics) and consider a larger range of scales (i.e., multi-scale). This complexity is creating a steadily growing demand for world-wide interoperable infrastructures that enable new, innovative types of e-science by jointly using different kinds of e-science infrastructures. This talk presents the design of an infrastructure interoperability reference model (IIRM) tailored to production needs. It is a trimmed-down version of the Open Grid Services Architecture (OGSA) in terms of functionality and complexity, drawing on lessons learned from TCP/IP versus ISO/OSI. At the same time, it is more specifically useful for production and therefore easier to implement.

Speaker: Morris Riedel studied computer science at the University of Hagen and received his master's degree in 2007. He has worked in EU research projects such as UniGrids, OMII-Europe and DEISA2, and currently serves as Strategic Director of the European Middleware Initiative (EMI). More recently, he was appointed one of the four architects of the US XSEDE infrastructure, the only European among them, and works closely with software engineering experts from Carnegie Mellon University. He also leads the "data replication" activities in the EU data infrastructure EUDAT. Over the years, he has built a considerable profile as an international expert in e-science infrastructure interoperability based on software engineering principles.

Seminar - Future of Multi- and Many-core Job Submission in LHCb