Ongoing Projects

VirtMat Tools for FAIR and Interdisciplinary Research softwarE - VM-FIRE

since 2026-01-01 - 2026-12-31

Modern research increasingly depends on computational methods, making adherence to the FAIR principles (Findable, Accessible, Interoperable, Reusable) essential for transparency, reproducibility, and long-term usability. The VM-FIRE project tackles this challenge by evolving the VirtMat Tools software suite—originally designed for materials science—into a versatile, user-friendly platform for simulations across disciplines.

VM-FIRE’s mission is to lower barriers to reproducible and efficient research while ensuring accessibility for users without deep computational expertise. In collaboration with the Jülich Supercomputing Center, the project will introduce key technical enhancements such as checkpointing, restart capabilities, modularization, and improved data storage. It will also strengthen compliance with FAIR and FAIR4RS principles by refining metadata and integrating persistent identifiers.
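As a rough illustration of what checkpointing and restart mean for a long-running simulation, the following minimal Python sketch periodically saves the loop state to disk and resumes from the last saved state after an interruption. It is not VirtMat Tools code: the function names (`save_checkpoint`, `load_checkpoint`, `run_simulation`), the JSON file format, and the toy update rule are assumptions made purely for illustration.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state atomically: dump to a temp file, then rename it."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
    os.replace(tmp_path, path)  # replaces any older checkpoint in one step


def load_checkpoint(path):
    """Return the saved state, or a fresh initial state if none exists."""
    if os.path.exists(path):
        with open(path) as handle:
            return json.load(handle)
    return {"step": 0, "value": 0.0}


def run_simulation(path, total_steps, checkpoint_every=10, stop_after=None):
    """Toy simulation loop (hypothetical): adds 0.5 to `value` at every step.

    `stop_after` simulates an interruption after that many steps in this call.
    """
    state = load_checkpoint(path)
    steps_done = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_done >= stop_after:
            break  # simulated crash or wall-time limit
        state["value"] += 0.5
        state["step"] += 1
        steps_done += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    return state
```

Calling `run_simulation` a second time with the same path picks up from the last checkpoint rather than from step 0, so only the steps since that checkpoint have to be recomputed.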

Beyond technical improvements, VM-FIRE emphasizes community building. Through workshops, tutorials, and collaborative channels, the project aims to create an active user-developer ecosystem that drives adoption and continuous innovation.

Finally, deploying VirtMat Tools at a Tier-1 high-performance computing center will showcase its scalability and interdisciplinary potential—supporting the Helmholtz Association’s vision of sustainable research software that fosters scientific innovation and knowledge transfer.

RenewBench

since 2026-01-01 - 2026-12-31

RenewBench is the first unified, open global benchmark for renewable energy data, designed to accelerate energy meteorological AI model development. By unifying open renewable electricity generation data from around the globe with global meteorological data, RenewBench will enable rigorous, scalable evaluation of energy meteorological AI models. The dataset will include rich metadata in the STAC format and be accessible via a fully documented Python package supporting PyTorch integration and automated evaluation. Automated benchmarking pipelines will focus initially on forecasting tasks across varying spatial and temporal scales. Beyond forecasting, RenewBench will foster the development and evaluation of generalizable and trustworthy AI models for a variety of tasks in energy meteorology.

Tackling Challenges in Time-to-Event Analyses via Boosting Distributional Copula Regression

since 2026-01-01 - 2028-12-31

Survival and other time-to-event outcomes are among the most prominent endpoints in clinical trials. Biostatistical methods for analyzing such data differ significantly from conventional techniques due to the presence of censoring, which occurs when the exact time of an event is unknown. Classical methods for time-to-event data often rely on assumptions that make it difficult to accurately model the data generating process and can lead to incorrect conclusions drawn from the data. Beyond addressing censoring, it is also important to identify relevant or informative factors for the respective event time endpoints and to understand their dependence structure. This project is concerned with developing sophisticated regression and variable selection techniques tailored to the joint analysis of complex data structures where the outcome of interest comprises multiple, potentially inter-dependent time-to-event or survival endpoints. We devise advanced copula-based approaches for high-dimensional datasets in order to tackle biomedical research questions related to time-to-event endpoints. The proposed methods shall yield interpretable models of potentially complex association and dependence structures as well as high predictive performance. All of the developed methods will be implemented in freely available software in order to broaden the applicability as well as to foster transparent and reproducible research.
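To make the idea of a copula linking several time-to-event endpoints more concrete, here is a minimal Python sketch that simulates two dependent event times and then censors them. It is an illustration only and not the project's method: the Gaussian copula, the exponential margins, the fixed follow-up cutoff, and all function names and parameter values are assumptions chosen for simplicity.

```python
import math
import random


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def sample_event_times(n, rho, rate1, rate2, seed=0):
    """Draw n pairs of event times whose dependence follows a Gaussian copula.

    rho is the copula correlation; rate1 and rate2 are the hazard rates of
    the two exponential margins (illustrative choices).
    """
    rng = random.Random(seed)
    eps = 1e-12  # keeps the uniforms strictly inside (0, 1)
    pairs = []
    for _ in range(n):
        # Correlated standard normals via a 2x2 Cholesky factor.
        z1 = rng.gauss(0.0, 1.0)
        z2 = rho * z1 + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        # Probability integral transform to uniforms.
        u1 = min(max(normal_cdf(z1), eps), 1.0 - eps)
        u2 = min(max(normal_cdf(z2), eps), 1.0 - eps)
        # Inverse exponential CDF gives the marginal event times.
        pairs.append((-math.log(1.0 - u1) / rate1, -math.log(1.0 - u2) / rate2))
    return pairs


def censor(pairs, cutoff):
    """Right-censor both endpoints at a fixed follow-up time.

    Each endpoint becomes (observed_time, event_indicator), where the
    indicator is 1 if the event was observed and 0 if it was censored.
    """
    return [
        ((min(t1, cutoff), int(t1 <= cutoff)), (min(t2, cutoff), int(t2 <= cutoff)))
        for t1, t2 in pairs
    ]


def kendall_tau(pairs):
    """Kendall's tau from concordant and discordant pairs (O(n^2))."""
    concordant = discordant = 0
    for i in range(len(pairs)):
        for j in range(i + 1, len(pairs)):
            sign = (pairs[i][0] - pairs[j][0]) * (pairs[i][1] - pairs[j][1])
            if sign > 0:
                concordant += 1
            elif sign < 0:
                discordant += 1
    return (concordant - discordant) / (concordant + discordant)
```

Because the copula separates the dependence from the margins, `rho` can be changed without touching the marginal survival distributions, and vice versa. For a Gaussian copula, Kendall's tau of the uncensored times is (2/π)·arcsin(rho), which `kendall_tau` can be used to check empirically.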

Bayesian inversion for hybrid deterministic-stochastic kinetic solvers

since 2025-06-01 - 2027-05-31

Kinetic equations are a core modelling tool across many domains of science and engineering, including fusion reactor design, radiation therapy planning and nuclear waste analysis. These equations model particle dynamics in a position-velocity phase space, whose high-dimensionality makes grid-based discretization expensive in practice. Often, one therefore uses particle-based Monte Carlo methods for their simulation. These methods have the drawback of producing simulation results with a stochastic sampling error, due to tracing a finite number of particles. The stochastic nature of this error presents challenges when performing, e.g., parameter estimation where one wishes to find the correct solver inputs to produce a simulation result that matches a measurement under given assumptions on measurement noise. Applying a Bayesian framework to such estimation problems, one assumes a prior distribution on the parameters to be identified. One then aims to compute a corresponding posterior distribution that takes into account how likely it is that the solver output for a given parameter value matches the provided measurement. In this project we consider sampling methods for evaluating this posterior, such as Markov chain Monte Carlo methods and ensemble Kalman inversion. The theory for these methods does in general not apply unchanged when using particle-based Monte Carlo solvers to evaluate the likelihood. We study how these methods perform in combination with such solvers and develop new robust and efficient variants of these methods to deal with such stochastic solvers. We develop these methods on mathematical toy problems and then extend their application to practical problems within nuclear fusion research and other relevant domains.
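The difficulty described above can be sketched in a few lines of Python. The example below is a toy under stated assumptions, not the project's method: the "solver" is a hypothetical stand-in that returns the parameter plus Monte Carlo sampling error, the prior and measurement noise are Gaussian, and all names and default values are invented for illustration. It runs a random-walk Metropolis chain in which every likelihood evaluation uses a fresh, noisy solver run, while the estimate stored for the current state is reused.

```python
import math
import random


def particle_solver(theta, n_particles, rng):
    """Hypothetical stochastic solver: averages noisy per-particle tallies.

    The exact output would be theta; the sample mean carries a sampling
    error with standard deviation 1 / sqrt(n_particles).
    """
    total = sum(theta + rng.gauss(0.0, 1.0) for _ in range(n_particles))
    return total / n_particles


def log_posterior_estimate(theta, measurement, noise_sd, prior_sd, n_particles, rng):
    """Gaussian prior plus Gaussian likelihood evaluated with the noisy solver."""
    output = particle_solver(theta, n_particles, rng)
    log_prior = -0.5 * (theta / prior_sd) ** 2
    log_likelihood = -0.5 * ((measurement - output) / noise_sd) ** 2
    return log_prior + log_likelihood


def noisy_metropolis(measurement, n_steps, step_sd=0.3, noise_sd=0.1,
                     prior_sd=10.0, n_particles=100, seed=0):
    """Random-walk Metropolis that reuses the stored estimate of the current state."""
    rng = random.Random(seed)
    theta = 0.0
    current = log_posterior_estimate(
        theta, measurement, noise_sd, prior_sd, n_particles, rng)
    chain = []
    for _ in range(n_steps):
        proposal = theta + rng.gauss(0.0, step_sd)
        candidate = log_posterior_estimate(
            proposal, measurement, noise_sd, prior_sd, n_particles, rng)
        # Accept with probability min(1, exp(candidate - current)).
        if math.log(1.0 - rng.random()) < candidate - current:
            theta, current = proposal, candidate
        chain.append(theta)
    return chain
```

In this Gaussian toy setting the solver's sampling error acts like additional measurement noise, so the chain samples a posterior that is wider than the one an exact solver would give. Increasing `n_particles` shrinks that gap at a higher cost per step, which is the kind of trade-off the project investigates for realistic solvers.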

Simulierte Welten (Phase V)

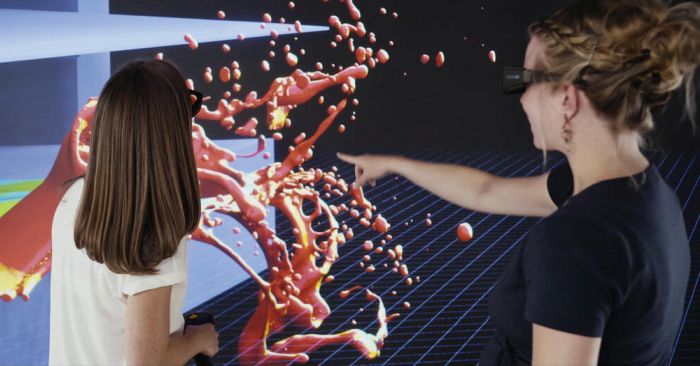

We encounter simulations unconsciously in many everyday situations: the daily weather forecast, non-destructive crash tests for car approval, lightweight and material-saving plastic parts in household appliances, or investment strategies for funds and pension investors...

An interdisciplinary team has set itself the task of bringing the topics of simulation, mathematical modelling, and artificial intelligence to schools. How do we recognise simulations? How are the results to be understood? And what do employees in data centres work on? How does AI work? Simulated Worlds answers these questions with many practical examples.

Kubernetes evaluation (Kube 3) - Kube3

since 2025-04-01 - 2027-08-31

Kubernetes is becoming relevant as a scaling infrastructure technology for more and more services. The Kubernetes evaluation project (Kube 3) focuses on the further development of cloud infrastructures in the state of Baden-Württemberg for the provision of Kubernetes. This also supports existing approaches in current bw projects, including bwGitLab, bwJupyter and bwCloud. The aim of the project is to develop and implement the necessary concepts and methods as well as forms of cross-university collaboration and to test them with specific use cases.


Spatial climate variability patterns reconstructed with Bayesian Hierarchical Learning

since 2025-02-01 - 2029-01-31

The project aims to reconstruct spatial patterns of timescale-dependent climate variability. To this end, a Bayesian hierarchical model will be developed that incorporates a variety of proxy data while accounting for proxy processes and noise. It aims to quantify, through Bayesian posterior distributions, the limitations and uncertainties of the derived climate variability reconstructions that stem from the covariance structure used and from the sparseness, spatial heterogeneity and noisiness of the observational data. We will use the climate variability map to investigate regional patterns of low-frequency variability and the corresponding implications, e.g. for the range of possible future climate trends in natural variability and for the frequency of extreme events.

The project is supported by Helmholtz Einstein International Berlin Research School in Data Science (HEIBRiDS) and co-supervised by Prof. Dr. Thomas Laepple from Alfred Wegener Institute (AWI) and Prof. Dr. Tobias Krüger from the Humboldt University.

Structured explainability for interactions in deep learning models applied to pathogen phenotype prediction

Explaining and understanding the underlying interactions of genomic regions are crucial for proper pathogen phenotype characterization such as predicting the virulence of an organism or the resistance to drugs. Existing methods for classifying the underlying large-scale data of genome sequences face challenges with regard to explainability due to the high dimensionality of data, making it difficult to visualize, access and justify classification decisions. This is particularly the case in the presence of interactions, such as those between genomic regions. To address these challenges, we will develop methods for variable selection and structured explainability that capture the interactions of important input variables. More specifically, (i) we work within a deep mixed models framework for binary outcomes that fuses generalized linear mixed models and a deep variant of structured predictors. We thereby combine statistical logistic regression models with deep learning for disentangling complex interactions in genomic data. In particular, we enable estimation when no explicitly formulated inputs are available for the models, as is the case, for instance, with genomics data. Further, (ii) we will extend methods for explainability of classification decisions, such as layerwise relevance propagation, to explain these interactions. By investigating these two complementary approaches on both the model and explainability levels, our main objective is to formulate structured explanations that not only give first-order, single-variable explanations of classification decisions, but also take their interactions into account. While our methods are motivated by our genomic data, they can be useful in, and extended to, other application areas in which interactions are of interest.

Probabilistic learning approaches for complex disease progression based on high-dimensional MRI data

This project proposes informed, data-driven methods to reveal pathological trajectories based on high-dimensional medical data obtained from magnetic resonance imaging (MRI), which are relevant as both inputs and outputs in regression equations to adequately perform early diagnosis and to model, understand, and predict actual and future disease progression. For this, we will fuse deep learning (DL) methods with Bayesian statistics to (1) accurately predict the complete outcome distributions of individual patients based on MRI data and further confounders and covariates (such as clinical or demographic variables), in order to adequately quantify uncertainty in predictions, in contrast to point predictions that deliver no measure of confidence, and (2) model temporal dynamics in biomedical patient data. Regarding (1), we will develop deep distributional regression models for image inputs to accurately predict the entire distributions of the different disease scores (e.g. symptom severity), which can be multivariate and are typically highly non-normally distributed. Regarding (2), we will model the complex temporal evolution in neurological diseases by developing DL-based state-space models. Neither model is tailored to a specific disease, but both will be developed and tested, by way of example, for two neurological diseases, namely Alzheimer’s disease (AD) and multiple sclerosis (MS), chosen for their different disease progression profiles.

Joint Lab HiRSE: Helmholtz Information - Research Software Engineering

The Joint Lab HiRSE and HiRSE concept see the establishment of central activities in RSE and the targeted sustainable funding of strategically important codes by so-called Community Software Infrastructure (CSI) groups as mutually supportive aspects of a single entity.

In a first “preparatory study”, HiRSE_PS evaluated the core elements of the HiRSE concept and their interactions in practice over the funding period of three years (2022 to 2024). The two work packages in HiRSE_PS dealt with the operation of CSI groups, in particular also for young codes, and with consulting and networking, respectively. The goal of the preparatory study was the further refinement of the concept, which can then be rolled out to the entire Helmholtz Information, or, if desired, to the entire Helmholtz Association with high prospects of success and high efficiency.

The Joint Lab HiRSE follows on from the preparatory study, continuing the incorporation of successful elements and extending it with an evaluation of the integration of additional CSI groups.

The philosophical reception of the Bible in the Middle Ages and its interpretation in the "longue durée"

Within the project, medieval textual witnesses from the rich commentary literature of the Gospel of John will be extensively transcribed, edited and enriched with additional metadata. In addition to a research data repository, various search, annotation, analysis and visualization services adapted to the project's research questions will be provided. In this way, approaches from qualitative and quantitative research will be brought together and enriched by additional methods.

bwRSE4HPC

In order to implement a sustainable and professional approach to the development and management of energy-optimized research software, suitable support structures and services operated by highly specialized research software engineers are required. This is where the new state service bwRSE4HPC comes in.

Verbundprojekt CausalNet - A flexible, robust, and efficient framework for integrating causality into machine learning models

Existing machine learning (ML) models typically rely on correlation, but not causation. This can lead to errors, bias, and eventually suboptimal performance. To address this, we aim to develop novel ways to integrate causality into ML models. In the project CausalNet, we advance causal ML toward flexibility, efficiency, and robustness: (1) Flexibility: We develop a general-purpose causal ML model for high-dimensional, time-series, and multi-modal data. (2) Efficiency: We develop techniques for efficient learning algorithms (e.g., synthetic pre-training, transfer learning, and few-shot learning) that are carefully tailored to causal ML. (3) Robustness: We create new environments/datasets for benchmarking. We also develop new techniques for verifying and improving the robustness of causal ML. (4) Open-source: We fill gaps in the causal ML toolchain to improve industry uptake. (5) Real-world applications: We demonstrate performance gains through causal ML in business, public policy, and bioinformatics for scientific discovery.
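The gap between correlation and causation can be shown with a small synthetic example. The Python sketch below is not part of CausalNet and makes strong simplifying assumptions: all relationships are linear, the confounder `z` is observed, and the variable names, coefficients, and function names are invented for illustration. A hidden common cause drives both `x` and `y`, so a naive regression of `y` on `x` reports an effect even though `x` has no causal influence on `y`; adjusting for the confounder removes it.

```python
import random


def slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs (with intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def residuals(xs, ys):
    """Residuals of ys after removing the linear effect of xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    b = slope(xs, ys)
    return [(y - mean_y) - b * (x - mean_x) for x, y in zip(xs, ys)]


def simulate(n, seed=0):
    """Synthetic data: z drives both x and y; x has no causal effect on y."""
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(n)]
    x = [zi + rng.gauss(0.0, 1.0) for zi in z]
    y = [2.0 * zi + rng.gauss(0.0, 1.0) for zi in z]
    return z, x, y


def naive_and_adjusted_effect(n=5000, seed=0):
    """Return the naive slope of y on x and the slope after adjusting for z."""
    z, x, y = simulate(n, seed)
    naive = slope(x, y)  # picks up the correlation induced by z
    # Adjust for the confounder: regress z out of both x and y first.
    adjusted = slope(residuals(z, x), residuals(z, y))
    return naive, adjusted
```

With these coefficients the naive slope is close to 1 in expectation, while the adjusted slope is close to 0, the true causal effect. Real applications are harder because confounders are often unobserved and relationships non-linear, which is where dedicated causal ML methods come in.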

Distributional Copula Regression for Space-Time Data

Distributional copula regression for space-time data develops novel models for multivariate spatio-temporal data using distributional copula regression. Of particular interest are tests for the significance of predictors and automatic variable selection using Bayesian selection priors. In the long run, the project will consider computationally efficient modeling of non-stationary dependencies using stochastic partial differential equations.

DFG-priority program 2298 Theoretical Foundations of Deep Learning

The goal of this project is to use deep neural networks as building blocks in a numerical method to solve the Boltzmann equation. This is a particularly challenging problem since the equation is a high-dimensional integro-differential equation, which at the same time possesses an intricate structure that a numerical method needs to preserve. Thus, artificial neural networks might be beneficial, but cannot be used out-of-the-box.

We follow two main strategies to develop structure-preserving neural network-enhanced numerical methods for the Boltzmann equation. First, we target the moment approach, where a structure-preserving neural network will be employed to model the minimal entropy closure of the moment system. By enforcing convexity of the neural network, one can show that the intrinsic structure of the moment system, such as hyperbolicity, entropy dissipation and positivity, is preserved. Second, we develop a neural network approach to solve the Boltzmann equation directly at the discrete particle velocity level. Here, a neural network is employed to model the difference between the full non-linear collision operator of the Boltzmann equation and the BGK model, which preserves the entropy dissipation principle. Furthermore, we will develop strategies to generate training data that fully sample the input space of the respective neural networks to ensure properly functioning models.
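For orientation, the second strategy can be written schematically as follows. This is a sketch in assumed notation that does not appear in the project description: $f$ is the particle distribution, $Q(f,f)$ the full non-linear collision operator, $M[f]$ the local Maxwellian with the same mass, momentum and energy as $f$, $\nu$ a collision frequency, and $N_\theta$ a neural network with parameters $\theta$.

```latex
\partial_t f + v \cdot \nabla_x f = Q(f, f),
\qquad
Q(f, f) \;\approx\;
\underbrace{\nu \, \bigl( M[f] - f \bigr)}_{\text{BGK model}}
\;+\;
\underbrace{N_\theta(f)}_{\text{learned correction}} .
```

In this reading the network only has to represent the discrepancy $Q(f,f) - \nu\,(M[f] - f)$ rather than the whole collision operator.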

Countrywide service bwGitLab

since 2024-07-01 - 2029-06-30

Based on the GitLab software, a state service for the administration, versioning and publication of software repositories for universities in Baden-Württemberg is being created as part of the IT alliance. GitLab also offers numerous possibilities for collaborative work and has extensive functionalities for software development and automation. The service enables or simplifies cross-location development projects between universities and with external partners, opens up new possibilities in the field of research data management, and can be used profitably in teaching. It also creates an alternative to cloud services such as GitHub and makes it easier to keep data from research and teaching in Baden-Württemberg.

Smart weather predictions for the 21st century through probabilistic AI-models initialized with storm-resolving climate projections - SmartWeather21

since 2024-05-01 - 2027-04-30

The ongoing warming of the earth's climate due to man-made climate change is fundamentally changing our weather. Traditionally, weather forecasts have been made based on numerical models, so-called Numerical Weather Predictions (NWP). Data-driven machine learning models, and in particular deep neural networks, have potential as surrogate models for fast and (energy) efficient emulation of NWP models. As part of the SmartWeather21 project, we want to investigate which DL architecture for NWP is best suited for weather forecasting in a warmer climate, based on the high-resolution climate projections generated with ICON as part of WarmWorld. To incorporate the high resolutions of the WarmWorld climate projections, we will develop data- and model-parallel approaches and architectures for weather forecasting in a warmer climate. Furthermore, we will investigate which (learnable combinations of) variables from the ICON climate projections provide the best, physically plausible forecast accuracy for weather prediction in a warmer climate. With this in mind, we develop dimension reduction techniques for the various input variables that learn a latent, lower-dimensional representation guided by the accuracy of the downstream weather forecast. The increased spatial resolution of the ICON simulations also allows conclusions to be drawn about the uncertainties of individual input and output variables at lower resolutions. As part of SmartWeather21, we will develop methods that parameterise these uncertainties using probability distributions and use them as input variables with lower spatial resolution in DL-based weather models. These can be propagated through the model as part of probabilistic forecasts.

EOSC Beyond

since 2024-04-01 - 2027-03-31

EOSC Beyond's overall objective is to advance Open Science and innovation in research in the context of the European Open Science Cloud (EOSC) by providing new EOSC Core capabilities that allow scientific applications to find, compose and access multiple Open Science resources and offer them as integrated capabilities to researchers.

bwNET2.0

Resilient, powerful and flexible network operation and the provision of individualized and secure network services are at the core of bwNET2.0. The aim is to contribute to fundamental research in the field of autonomous networks on the one hand and to design and implement concrete building blocks and mechanisms that can be used to further develop BelWü and campus networks on the other.

AI-enhanced differentiable Ray Tracer for Irradiation-prediction in Solar Tower Digital Twins - ARTIST

Solar tower power plants play a key role in facilitating the ongoing energy transition as they deliver dispatchable climate neutral electricity and direct heat for chemical processes. In this work we develop a heliostat-specific differentiable ray tracer capable of modeling the energy transport at the solar tower in a data-driven manner. This enables heliostat surface reconstruction and thus drastically improves the irradiance prediction. Additionally, such a ray tracer also drastically reduces the amount of data required for the alignment calibration. In principle, this makes learning for a fully AI-operated solar tower feasible. The desired goal is to develop a holistic AI-enhanced digital twin of the solar power plant for design, control, prediction, and diagnosis, based on the physical differentiable ray tracer. Any operational parameter in the solar field influencing the energy transport may be optimized with it. For the first time, gradient-based approaches to, e.g., field design, aim point control, and current state diagnosis become possible. By extending it with AI-based optimization techniques and reinforcement learning algorithms, it should be possible to map real, dynamic environmental conditions to the twin with low latency. Finally, due to the full differentiability, visual explanations for the operational action predictions are possible. The proposed AI-enhanced digital twin environment will be verified at a real power plant in Jülich. Its inception marks a significant step towards a fully automatic solar tower power plant.

Authentication and Authorisation for Research Collaboration Technical Revision to Enhance Effectiveness - AARC TREE

Collaboration and sharing of resources is critical for research. Authentication and Authorisation Infrastructures (AAIs) play a key role in enabling federated interoperable access to resources.

The AARC Technical Revision to Enhance Effectiveness (AARC TREE) project takes the successful and globally recognised “Authentication and Authorisation for Research Collaboration” (AARC) model and its flagship outcome, the AARC Blueprint Architecture (BPA), as the basis to drive the next phase of integration for research infrastructures: expanding federated access management to integrate user-centric technologies, expanding access to federated data and services (authorisation), consolidating existing capacities, and avoiding fragmentation and unnecessary duplication.

SCC participates in AARC TREE to continue developing the Blueprint Architecture. Here we contribute to technical recommendations as well as to policy development. Since SCC is also a core member of the IAM project of the German NFDI, we can raise awareness of NFDI requirements in AARC and feed new developments back to NFDI in a very timely manner.

Holistic Imaging and Molecular Analysis in life-threatening Ailments - HIMALAYA

since 2024-02-01 - 2027-01-31

The overall goal of this project is to improve the radiological diagnosis of human prostate cancer in clinical MRI by AI-based exploitation of information from higher resolution modalities. We will use the brilliance of HiP-CT imaging at beamline 18 and an extended histopathology of the entire prostate to optimise the interpretation of MRI images in the context of a research prototype. In parallel, the correlation of the image data with the molecular properties of the tumours is planned for a better understanding of invasive tumour structures. An interactive multi-scale visualisation across all modalities forms the basis for vividly conveying the immense amounts of data. As a result, at the end of the three-year project phase, the conventional radiological application of magnetic resonance imaging (MRI) is to be transferred into a diagnostic standard that also reliably recognises patients with invasive prostate tumours that have often been incorrectly diagnosed to date, taking into account innovative AI algorithms. In the medium term, a substantial improvement in the care of patients with advanced prostate carcinoma can therefore be expected. In addition, we will make the unique multimodal data set created in the project, including visualisation tools, available as open data to enable further studies to better understand prostate cancer, which could potentially lead to novel diagnostic and therapeutic approaches.

bwHPC-S5: Scientific Simulation and Storage Support Services Phase 3 - bwHPC-S5 Phase 3

Together with the insights, assessments and recommendations gained, the project is to address the current challenges and defined fields of action of the framework concept of the universities of the state of Baden-Württemberg for HPC and DIC in the period 2025 to 2032 through the following measures:

• Further development of science support with regard to the competences needed to support novel system and method concepts (AI, ML or quantum computing), networking with methods research, holistic needs analyses and support strategies (e.g. onboarding)

• Increasing energy efficiency through awareness-raising as well as the investigation and use of new operating models and workflows, including optimised software

• Testing and flexible integration of new system components and architectures, resources (e.g. cloud) as well as virtualisation and containerisation solutions

• Implementation of new software strategies (e.g. sustainability and development processes)

• Expansion of the functionalities of the Baden-Württemberg data federation (e.g. data transfer service)

• Implementation of concepts for handling sensitive data and for establishing digital sovereignty

• Networking and cooperation with other research infrastructures

bwCloud3

The bwCloud has been an established and well-received state service since 2018, meeting the demand for interactive, high-performance, resource-intensive infrastructure-as-a-service offerings.

The bwCloud3 project aims to add explicit service components to this existing infrastructure-as-a-service offering and thus create a range of value-added services, such as a connection to campus management and learning management systems.

In addition, the aim is to improve the climate compatibility of the bwCloud through various measures, to ensure consistent IT security at a high level and to establish a business model. These goals are to be achieved over a project duration of four years, during which time the bwCloud is to be released into independent and long-term financing.

ICON-SmART

Contact: Dr. Jörg Meyer

Funding: Hans-Ertel-Zentrum für Wetterforschung

“ICON-SmART” addresses the role of aerosols and atmospheric chemistry for the simulation of seasonal to decadal climate variability and change. To this end, the project will enhance the capabilities of the coupled composition, weather and climate modelling system ICON-ART (ICON, icosahedral nonhydrostatic model – developed by DWD, MPI-M and DKRZ with the atmospheric composition module ART, aerosols and reactive trace gases – developed by KIT) for seasonal to decadal predictions and climate projections in seamless global to regional model configurations with ICON-Seamless-ART (ICON-SmART).

Based on previous work, chemistry is a promising candidate for speed-up by machine learning. In addition, the project will explore machine learning approaches for other processes. The ICON-SmART model system will provide scientists, forecasters and policy-makers with a novel tool to investigate atmospheric composition in a changing climate and allow us to answer questions that were previously out of reach.

Photonic materials with properties on demand designed with AI technology

This project uses artificial neural networks in an inverse design problem of finding nano-structured materials with optical properties on demand. Achieving this goal requires generating large amounts of data from 3D simulations of Maxwell's equations, which makes this a data-intensive computing problem. Tailored algorithms are being developed that address both the learning process and the efficient inversion. The project complements research in the SDL Materials Science on AI methods, large data sets generated by simulations, and workflows.

Artificial intelligence for the Simulation of Severe AccidentS - ASSAS

The ASSAS project aims at developing a proof-of-concept SA (severe accident) simulator based on ASTEC (Accident Source Term Evaluation Code). The prototype basic-principle simulator will model a simplified generic Western-type pressurized light water reactor (PWR). It will have a graphical user interface to control the simulation and visualize the results. It will run in real-time and even much faster for some phases of the accident. The prototype will be able to show the main phenomena occurring during a SA, including in-vessel and ex-vessel phases. It is meant to train students, nuclear energy professionals and non-specialists.

In addition to its direct use, the prototype will demonstrate the feasibility of developing different types of fast-running SA simulators while keeping the accuracy of the underlying physical models. To this end, different computational solutions will be explored in parallel. Code optimisation and parallelisation will be implemented. Besides these reliable techniques, different machine-learning methods will be tested to develop fast surrogate models. This alternative path is riskier, but it could drastically enhance the performance of the code. A comprehensive review of ASTEC's structure and available algorithms will be performed to define the most relevant modelling strategies, which may include the replacement of specific calculation steps, entire modules of ASTEC or more global surrogate models. Solutions will be explored to extend the models developed for the PWR simulator to other reactor types and SA codes. The training database of SA sequences used for machine learning will be made openly available. Developing an enhanced version of ASTEC and interfacing it with a commercial simulation environment will make it possible for the industry to develop engineering and full-scale simulators in the future. These can be used to design SA management guidelines, to develop new safety systems and to train operators to use them.

Identity & Access Management for the National Research Data Infrastructure - IAM4NFDI

Identity and Access Management (IAM) is concerned with the processes, policies and technologies for managing digital identities and their access rights to specific resources. Providing IAM as a basic service, IAM4NFDI is funded by Base4NFDI.

The task of the Basic Service IAM is to establish and provide a state-of-the-art AAI, that fosters cross-consortial and international collaboration.

A central goal of IAM4NFDI is therefore to enable unified access to data, software, and compute resources, as well as sovereign data exchange and collaborative work, for all NFDI consortia and compatible initiatives. In order to achieve this, an extended version of the AARC Blueprint Architecture will be implemented and provided as a service to interested NFDI consortia.

This will enable researchers from different domains and institutions to access digital resources within and beyond NFDI. Users from approx. 400 German research and higher education institutions plus approx. 4800 home organisations worldwide will be able to access services and resources provided by the NFDI Community AAI. Google, GitHub, or ORCID accounts can also be used to access specific parts of the provided services.

All tools and solutions developed within IAM4NFDI are compatible with standards and the AARC Recommendations.


Data and services to support marine and freshwater scientists and stakeholders - AquaINFRA

The AquaINFRA project aims to develop a virtual environment equipped with FAIR multi-disciplinary data and services to support marine and freshwater scientists and stakeholders in restoring healthy oceans, seas, coastal and inland waters. The AquaINFRA virtual environment will enable the target stakeholders to store, share, access, analyse and process research data and other research digital objects from their own discipline, across research infrastructures, disciplines and national borders, leveraging EOSC and other existing operational dataspaces. Besides supporting the ongoing development of the EOSC as an overarching research infrastructure, AquaINFRA addresses the specific need to enable researchers from the marine and freshwater communities to work and collaborate across those two domains.

Materialized holiness - toRoll

In the project "Materialized Holiness" Torah scrolls are studied as an extraordinary codicological, theological, and social phenomenon. Unlike, for example, copies of the Bible, the copying of sacred scrolls has been governed by strict regulations since antiquity and is complemented by a rich commentary literature.

Together with experts in Jewish studies, materials research, and the social sciences, we would like to build a digital repository of knowledge that does justice to the complexity of this research subject. Jewish scribal literature with English translations, material analyses, paleographic studies of medieval Torah scrolls, as well as interview and film material on scribes of the present day are to be brought together in a unique collection and examined in an interdisciplinary manner for the first time. In addition, a 'virtual Torah scroll' to be developed will reveal minute paleographic details of the script and its significance in cultural memory.

Metaphors of Religion - SFB1475

The SFB 1475, located at the Ruhr-Universität Bochum (RUB), aims to understand and methodically record the religious use of metaphors across times and cultures. To this end, the subprojects examine a variety of scriptures from Christianity, Islam, Judaism, Zoroastrianism, Jainism, Buddhism, and Daoism, originating from Europe, the Near and Middle East, as well as South, Central, and East Asia, and spanning the period from 3000 BC to the present. For the first time, comparative studies on a unique scale are made possible through this collaborative effort.

Within the Collaborative Research Center, the SCC, together with colleagues from the Center for the Study of Religions (CERES) and the RUB, is leading the information infrastructure project "Metaphor Base Camp", in which the digital data infrastructure for all subprojects is being developed. The central component will be a research data repository with state-of-the-art annotation, analysis and visualization tools for the humanities data.


Bayesian Machine Learning with Uncertainty Quantification for Detecting Weeds in Crop Lands from Low Altitude Remote Sensing

Weeds are one of the major contributors to crop yield loss. As a result, farmers deploy various approaches to manage and control weed growth in their agricultural fields, the most common being chemical herbicides. However, herbicides are often applied uniformly to the entire field, which has negative environmental and financial impacts. Site-specific weed management (SSWM) considers the variability in the field and localizes the treatment. Accurate localization of weeds is the first step for SSWM. Moreover, information on the prediction confidence is crucial for deploying methods in real-world applications. This project aims to develop methods for weed identification in croplands from low-altitude UAV remote sensing imagery and uncertainty quantification using Bayesian machine learning, in order to develop a holistic approach for SSWM. The project is supported by Helmholtz Einstein International Berlin Research School in Data Science (HEIBRiDS) and co-supervised by Prof. Dr. Martin Herold from GFZ German Research Centre for Geosciences.

PUNCH4NFDI

PUNCH4NFDI is the NFDI consortium of particle, astro-, astroparticle, hadron and nuclear physics, representing about 9,000 scientists with a Ph.D. in Germany, from universities, the Max Planck Society, the Leibniz Association, and the Helmholtz Association. PUNCH physics addresses the fundamental constituents of matter and their interactions, as well as their role in the development of the largest structures in the universe: stars and galaxies. The achievements of PUNCH science range from the discovery of the Higgs boson and the installation of a 1 cubic kilometer particle detector for neutrino detection in the Antarctic ice to the detection of the quark-gluon plasma in heavy-ion collisions and the first picture ever of the black hole at the heart of the Milky Way.

The prime goal of PUNCH4NFDI is the setup of a federated and "FAIR" science data platform, offering the infrastructures and interfaces necessary for the access to and use of data and computing resources of the involved communities and beyond. The SCC plays a leading role in the development of the highly distributed Compute4PUNCH infrastructure and is involved in the activities around Storage4PUNCH, a distributed storage infrastructure for the PUNCH communities.

NFDI-MatWerk

The NFDI-MatWerk consortium receives five years of funding as part of the National Research Data Infrastructure (NFDI) for the development of a standardized platform for research data in materials science and engineering. In this specialist area, the physical mechanisms of materials are characterized in order to develop resource-saving materials with ideal properties for the respective applications. Using a knowledge graph-based infrastructure, it should be possible to access research data distributed throughout Germany in such a way that even search queries and evaluations on complex topics can be made quickly and easily accessible. At KIT, the Scientific Computing Center (SCC) and the Institute for Applied Materials (IAM) are involved. At the SCC, we are building the digital research environment with the NFDI-MatWerk partners.


NFFA Europe Pilot - NEP

NEP stands for “Nanoscience Foundries & Fine Analysis Europe Pilot” and is a European infrastructure project. It provides important resources for nanoscience research and develops new cooperative working methods.

Comprehensive data management technologies and an open access data archive will make the research data FAIR and ensure interoperability with the European Open Science Cloud. In this project, the SCC is developing innovative tools for research data and metadata management, which are essential components of the NEP infrastructure. The SCC is also responsible for the “Virtual Access” work package.

Joint Lab VMD - JL-VMD

Within the Joint Lab VMD, the SDL Materials Science develops methods, tools and architectural concepts for supercomputing and big data infrastructures, which are tailored to tackle the specific application challenges and to facilitate digitalization in materials research and the creation of digital twins. In particular, the Joint Lab develops a virtual research environment (VRE) that integrates computing and data storage resources into existing workflow management systems and interactive environments for simulation and data analysis.

Joint Lab MDMC - JL-MDMC

As part of the Joint Lab “Integrated Model and Data Driven Materials Characterization” (MDMC), the Simulation Data Laboratory (SDL) for Materials Science is developing a concept for a data and information platform. This platform is intended to make data on materials available in a knowledge-oriented manner as an experimental basis for digital twins on the one hand and for the development of simulation-based methods for predicting material structure and properties on the other. The platform defines a metadata model for the description of samples and data sets from experimental measurements. In addition, data models for material simulation and correlative characterization are harmonized using materials science vocabularies and ontologies.

Regression Models Beyond the Mean – A Bayesian Approach to Machine Learning

Recent progress in computer science has led to data structures of increasing size, detail and complexity in many scientific studies. Such big data applications not only allow but also require more flexibility to overcome modelling restrictions that may result in model misspecification and biased inference, so further insight into more accurate models and appropriate inferential methods is of enormous importance. This research group will therefore develop statistical tools for both univariate and multivariate regression models that are interpretable and that can be estimated quickly and accurately. Specifically, we aim to develop probabilistic approaches to recent innovations in machine learning in order to estimate models for huge data sets. To obtain more accurate regression models for the entire distribution, we construct new distributional models that can be used for both univariate and multivariate responses. In all models we will address the issues of shrinkage and automatic variable selection to cope with a huge number of predictors, and the possibility of capturing any type of covariate effect. This proposal also includes software development as well as applications in the natural and social sciences (such as income distributions, marketing, weather forecasting, chronic diseases and others), highlighting its potential to contribute to important facets of modern statistics and data science.

Helmholtz Metadata Collaboration Platform - HMC

The overarching goal of the Helmholtz Metadata Collaboration Platform is to promote the qualitative enrichment of research data through metadata in the long term, to support researchers - and to implement this in the Helmholtz Association and beyond. With the FAIR Data Commons Technologies work package, the SCC is developing technologies and processes to make research data from all research areas of the Helmholtz Association available and to provide researchers with easy access in accordance with the FAIR principles. This is achieved on a technical level through standardized interfaces that are based on recommendations and standards developed within globally networked research data initiatives, e.g. the Research Data Alliance (RDA, https://www.rd-alliance.org/). For researchers, these interfaces are made usable through easy-to-use tools, generally applicable processes and recommendations for handling research data in everyday scientific work.

Helmholtz AI

The Helmholtz AI Platform is a research project of the Helmholtz Incubator "Information & Data Science". The overall mission of the platform is the "democratization of AI for a data-driven future"; it aims to make AI algorithms and approaches available to a broad user group in an easy-to-use and resource-efficient way.



RTG 2450 - GRK 2450 (DFG)

In the Research Training Group (RTG) "Tailored Scale-Bridging Approaches to Computational Nanoscience" we investigate problems that are not tractable with the standard tools of computational chemistry. The research is organized in seven projects. Five projects address scientific challenges such as friction, materials aging, material design and biological function. In two further projects, new methods and tools in mathematics and computer science are developed and provided for the special requirements of these applications. The SCC is involved in projects P4, P5 and P6.

Helmholtz Federated IT Services - HIFIS

Helmholtz Federated IT Services (HIFIS) establishes a secure and easy-to-use collaborative environment with ICT services that are efficient and accessible from anywhere. HIFIS also supports the development of research software with a high level of quality, visibility and sustainability.

CRC 1173 Wave phenomena

Waves are everywhere, and understanding their behavior leads us to understand nature. The goal of CRC 1173 »Wave Phenomena« is therefore to analytically understand, numerically simulate, and eventually manipulate wave propagation under realistic scenarios by intertwining analysis and numerics.

Computational and Mathematical Modeling Program - CAMMP

CAMMP stands for Computational and Mathematical Modeling Program. It is an extracurricular offering of KIT for students of different ages. We want to make the public aware of the social importance of mathematics and simulation sciences. For this purpose, students work together with teachers in various event formats, actively solving problems with the help of mathematical modeling and computers. In doing so, they explore real problems from everyday life, industry or research.